Ubiquity Usability Testing: Fall 08 1.2 Tests

Kris graciously made a Ubiquity command that allows you to select any time code and jump to that point in time.

Objectives

Begin probing how to make Ubiquity accessible and useful in the context of including it with the mainline Firefox distribution. As this is an early alpha, I will focus on the core Ubiquity interface and log bugs against individual commands separately.

Methodology

See main article Methodology

This is largely an exploratory, qualitative test of what users do when presented with Ubiquity for the first time. It follows an interview format in which the participants decide which tasks to perform next.

Analyzing Data

These tables are being published before I have had a chance to have someone verify them and link them to the Trac database. They are subject to changes, revisions, and mistakes. This is a wiki, so you can help out ;)

| Issue | Count | Severity | Testers | Category | Notes | Trac # |
|---|---|---|---|---|---|---|
| Gives up (discovery) | 5 | 3 | 4, 6, 9, 10, 11 | Discovery | Users who gave up on finding the hotkey combination. | 402, 440 |
| Finds hotkey | 3 | 0 | 3, 5, 8 | Discovery | Took anywhere from 1 to 9 min. | |
| Notices hotkey setting field | 2 | 1 | 5, 8 | Learnability | #5 didn't know what it was (both of his guesses were wrong); #8 found it by accident and changed it by accident. #7 accidentally changed the setting. | |
| Changes hotkey accidentally | 2 | 3 | 7, 8 | | | 545 |
| Problems with command structure | 1 | 2 | 10 | Learnability | E.g. the need for modifiers. | |
| Confused by jargon | 5 | 1.5 | 6, 7, 11 | Learnability | Wiki, hotkey, mashup, etc. See Demonstrating Value. | 547 |
| Confused by Mozilla wiki | 2 | 2 | 6, 8 | Learnability | We should move the help to something other than this wiki. | 402 |
| Tries Wikipedia for help | 2 | 1 | 5, 9 | Learnability | The "help system" includes Wikipedia and Google. | |
| Types a URL instead of using the email command | 3 | 2 | 4, 9, 11 | Learnability | Lead for statistical analysis; make common webmail URLs synonyms of the email command. | 572 |
| Tries right-click | 1 | 1 | 9 | Discovery | Contextual menus have discovery issues; the one tester who used it did so only because he read about it in the tutorial. He never opened Ubiquity either. (link to other studies on contextual menus) | |
| Tries watching video | 6 | | 6, 7, 8, 9, 10, 11 | Learnability, Value | | |
| Video won't load | 3 | 2 | 6, 10, 11 | Learnability | External validity of this data is poor because all users were on the same wifi connection (i.e. not a random sample). | 547 |
| Other video problems | 1 | 1.5 | 7 | | Video is buzzword-laden. | 547 |
| Video volume | 2 | 2 | 7, 10 | | Sound | 547 |

Commands

commandname-: total number of unsuccessful attempts

commandname+: total number of testers who succeeded

Once a tester has executed a command successfully, further successful attempts don't tell us anything, so each tester counts only once toward commandname+. When someone gets it wrong, the total number of attempts and the separate issues are all counted. Think of it this way: there are infinitely many ways to crash a plane, but only one right way to land one.
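As a minimal sketch of this counting rule, assuming a hypothetical per-attempt log (the `Attempt` record and `tally` function are illustrative, not part of Ubiquity or any existing tooling): every failed attempt counts toward `commandname-`, while each tester counts at most once toward `commandname+`. Counting separate issues within a failure would need extra annotation that this sketch leaves out.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Attempt:
    """One observed attempt at a command (hypothetical log record)."""
    tester: int
    command: str
    succeeded: bool


def tally(attempts):
    """Return {command: (failed_attempts, successful_testers)}.

    Every failed attempt is counted ("commandname-"), but each tester's
    successes count only once ("commandname+"), because repeat successes
    tell us nothing new.
    """
    failures = defaultdict(int)
    succeeded_by = defaultdict(set)
    for a in attempts:
        if a.succeeded:
            succeeded_by[a.command].add(a.tester)
        else:
            failures[a.command] += 1
    commands = sorted(set(failures) | set(succeeded_by))
    return {c: (failures[c], len(succeeded_by[c])) for c in commands}


# Illustrative log: tester 10 fails twice, tester 5 succeeds twice.
log = [
    Attempt(10, "email", False),
    Attempt(10, "email", False),
    Attempt(5, "email", True),
    Attempt(5, "email", True),
]
print(tally(log))  # {'email': (2, 1)}
```

Using a set of testers per command, rather than a counter, is what makes repeat successes by the same person drop out automatically.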

Remember, these numbers (as with all usability statistics) are meant to bring a degree of objectivity to what are inherently subjective observations.

| Command | Count | Testers | Notes | Trac # |
|---|---|---|---|---|
| Email- | 5 | 9, 10, 11 | Multiple issues. First, users don't understand the need for modifiers ("to janedoe@gmail.com" or "email this"). Second, users try typing in the URL of their service provider (mail.yahoo.com); when that fails, they assume Ubiquity doesn't work with their email provider. Finally, the email command is just very buggy. | 572, 574 |
| Email+ | 3 | 5, 6, 7 | | |
| Map- | 3 | 8, 9, 10 | Somewhat invalid, as the errors stem from discovery problems with Ubiquity itself. All participants went straight to Google Maps instead of using the command; should we provide contextual reminders or clues on Google Maps itself? | |
| Map+ | 4 | 7, 8, 10, 11 | | |
| Wiki- | 0 | | | |
| Wiki+ | 4 | 6, 7, 10, 11 | | |
| Weather- | 0 | | | |
| Weather+ | 3 | 5, 10, 11 | | |
| Define- | 1 | 5 | "sudo" did not show up in Define.com. Fallback dictionaries (Urban Dictionary, Wiktionary, Google's define:, etc.) would be a smart idea. | 404 |
| Define+ | 3 | 5, 7, 10 | | |
| Translate- | 2 | 10 | This caused more confusion than is reflected here. Executing it was not the problem; users didn't expect it to change the text on the page. | 54 |
| Translate+ | 2 | 5, 8 | | |
| Help- | 1 | 10 | Couldn't guess the command correctly. | |
| Map-insert- | 6 | 5, 6, 7, 8 | Requiring the user to click on the map is "counter intuitive." | 542 |
| Map-insert+ | 2 | 5 | | |
| Yelp- | | | | |
| Yelp+ | 1 | 8 | | |
| Google+ | 2 | 6, 7 | | |

Synonyms & new command suggestions

The email command caused the most trouble, especially when chained with inserting a map. Some testers tried hotkeys such as "control E", or typed a URL such as email.yahoo.com into the command line. Testers 8 and 10 were prolific guessers.

- Lyrics 14:09

- Yelp insert- 21:14

- Find 18:44

- Locate 19:10

- Help add command 23:37

- New command 23:39

- Gadgets-Command list

- Find gadgets-Command list

- Update-Command list

- What's new-Command list

- Add command-Command list

- Stockprices

- -Stock Prices

- Ticker--Stock Prices

- Zipcode -Map

- Directions-Map

- Closest (e.g. "closest Japanese restaurant")

- “map home”: a modifier of the map command for location.

What really jumps out at me here are "yelp insert" and "help add command". Should insert be a universal modifier? And "help add command" suggests that help for commands should be centered on an entry for each command, as in Enso.

Demographics

The aggregate demographics of the participants are uninteresting. The demographics were collected to provide insight into user behavior, so each tester's demographics and feedback are available on their session page.

The accuracy of the data is questionable. There is sampling bias: the previous survey ours was modeled on was conducted at random over the web, whereas these participants had someone sitting next to them, which created social pressure. Test Pilot is probably the best option for studies that require statistically accurate sampling.

A couple of testers commented that they worked full time on computers, so their answers should have been 40+ hours per week, but none marked that option. Overall, there was an even distribution from "1-5 hours" all the way to "21-40 hours".

None of the users considered themselves power users, which could be partly due to the pseudo-anonymity that online forms provide. Half labeled themselves "modest/beginner", one was "illiterate", and the rest considered themselves "experienced." I also feel that these terms are loaded and inappropriate for a survey, but they are what the previous study used.

Firefox usage history could have been skewed by social pressure as well, although it matched the previous study well. One participant was not a Firefox user, two had "Less than 3 months" of experience, one had used Firefox for "1-2 years", and six had used Firefox "Over 2 years".

The reliable statistics are OS and web service usage, as there is no social judgment attached to these questions. Seven used Windows, two used Linux, and one used OS X. Everyone used email, Wikipedia, and a map/directions provider. Eight used Gmail, and half had used a translation service.

A PDF of the demographics with pretty graphs is here; a zip of the spreadsheet in numerous formats is here.

Feedback

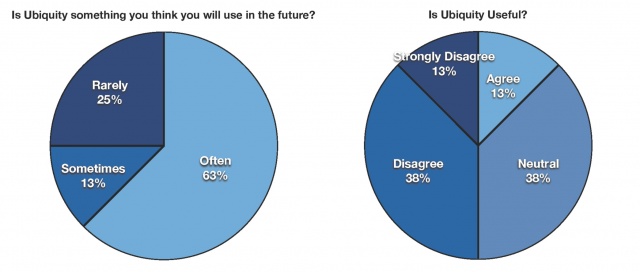

The feedback has internal consistency issues. While most users said they would use the product, most did not think Ubiquity was very useful. Furthermore, the facilitator's impression is that most were frustrated with Ubiquity. None of the participants who were emailed asking whether they still use Ubiquity responded, which is another negative sign.

Because of the small sample size, it's tough to draw solid conclusions from the data when the variables do not correlate.

As Dana Chisnell commented, "I wonder if what this says is that your participants thought it's an interesting idea, it could be developed more, but there isn't a lot of immediate value because it doesn't actually make their tasks easier."

Is there something that the help is lacking?

Some participants chose not to leave feedback; testers 3 and 4 did not have these questions on their test.

- 5 Break into multiple pages, not just one big scrolling adventure.

- 6 Better images, less words. Working movie.

- 7 No phone numbers to call, video is a bit dry, web page could be spruced up a bit visually

- 8 Easier searches, maybe a help that brings up lists of help options like in ubiquity - or have help directly in the ubiquity window so you don't have to stop browsing to find out how to do something.

- 10 If it needs help, it's not psychically reading my mind enough. I've never used help for any other software.

Tester 10 has a good point, even if it is a bit juvenile. It is something every usability professional told me when I asked for advice: if the user needs the help section (however good that section is), you have already failed.

Suggestions, thoughts, Kudos

- 5 Would like some sort of window persistence / docking functionality. Inserting non-native content onto any page you like (map insert) might be a bit presumptuous.

- The comment about inserting content concerned copyright issues.

- 6 It might be hard for people to learn how to use the tool without extra help. It is confusing for people who don't know how to program or use computer at a high level

- 7 a very interesting addition to mozilla browser. It seems to make browsing/searching/emailing much more efficient.

- 8 Make commands easier to edit, or have ubiquity understand what you're trying to say. Editing and adding new commands within the ubiquity window.

- 10 no one is ever gonna get using ctrl + space to pull it up. Please make it part of the awesome bar or a bar that is on all the time.

Insights

Everyone takes away something different from each session, and those individual insights contribute a lot to our overall understanding. Please contribute to this section heavily.

Discovery

Skipping the hotkey information cut across user competency levels: of the two who didn't discover it by accident, one rated himself computer "illiterate" and the other "experienced." What they had in common was reading the documentation carefully.

Discovery will be enhanced by placing Ubiquity into the URL bar.

Marketing, and possibly contextual on-screen notifications, would help expose users to Ubiquity and its interface outside of the browser experience.

Learnability

The tutorial saw a lot of skipping around (Trac #404), which tutorials are not designed to accommodate. As Tester 8 put it, "[reading from the intro page] 'Read the ubiquity tutorial' - that sounds boring." Moving toward an Enso-like approach to help (where each command page has its own instructions) would be beneficial; Enso's help system has undergone usability testing.

Viewers also skipped around in the video. However, scrubbing through a long video is too clumsy for it to serve as a reference. An intro video plus shorter, command-oriented videos might prove beneficial.

It's hard to gauge how long people stayed tuned in to the screencast; they would often experiment and then come back. It would be good to do some analysis with a little JS that tracks how long our current user base stays on the page/tab after clicking play. Stay tuned for some blog posts (www.indolering.com) on thoughts concerning video.
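The tracking itself would be a little JavaScript in the page, but the analysis side can be sketched on its own. The sketch below assumes, hypothetically, that the page logs timestamped events labeled `play`, `hidden`, `visible` and `unload` (the middle two could come from the browser's page-visibility events), and adds up how long the tab stayed visible after the first click on play. The event labels and the `watch_time` function are illustrative, not an existing Ubiquity or Mozilla API.

```python
def watch_time(events):
    """Seconds the tab stayed visible after the first "play" event.

    `events` is a time-ordered list of (timestamp_seconds, kind) tuples,
    where kind is one of the hypothetical labels "play", "hidden",
    "visible" or "unload". A visible stretch still open when the log
    ends without an "unload" is not counted.
    """
    total = 0.0
    started = False   # has the viewer clicked play yet?
    visible = True    # assume the tab starts out visible
    since = None      # start of the current visible stretch after play
    for t, kind in events:
        if kind == "play" and not started:
            started = True
            if visible:
                since = t
        elif kind == "hidden":
            if since is not None:
                total += t - since
                since = None
            visible = False
        elif kind == "visible":
            visible = True
            if started and since is None:
                since = t
        elif kind == "unload":
            if since is not None:
                total += t - since
            break
    return total


# Play, switch away to experiment for a minute, come back, then leave.
session = [(0, "play"), (30, "hidden"), (90, "visible"), (120, "unload")]
print(watch_time(session))  # 60.0
```

Counting only visible time after play separates "watched the screencast" from "left the tab open while experimenting", which is exactly the ambiguity observed in the sessions.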

Demonstrating Value (marketing)

There were issues with mental models, namely the need for modifiers and having to treat Ubiquity as a dumb robot rather than an AI that could pass the Turing test.

One angle of attack is to consider how to properly shape the user's mental model. The buzzword-heavy video confused many participants, and I believe it also set expectations that were impossible to meet.

Even after the participants had learned to use Ubiquity, they didn't really find it all that useful, nor do they appear to have continued using it after the tests.

Slashdot considers Ubiquity (or rather, "natural language interfaces") bloat. Granted, this IS /., but tech fanboys are the base that seeded Firefox and taught others to use it.

Proper marketing should teach users how to use the unfamiliar interface, and the interface should then match or exceed the expectations that the marketing sets.

Explicit control of mental framing is what got Bush and Obama elected. While framing and marketing are deep issues, my takeaway from the studies is that controlling the message is essential: it keeps us from scaring users off or giving false impressions, and it can convince users that Ubiquity is worth learning before they ever interact with it.

Continual Improvement

The continual development cycle of the Toyota Production System (TPS) turns everything into a scientific experiment: coming up with theories and hypotheses, testing them, and analyzing the results to make exact decisions about changes. Participants expect Ubiquity to get things just right. If a problem prevents a user from using a command, they are unlikely to try again. While we do our best to anticipate those expectations and problems, we can't catch them all. So how do we find these errors and fix them?

A major learning experience came from working with the Ubiquity development team and its support structures. UI engineers are part of the development workflow, so final usability reports generally recommend improvements to the development workflow in addition to UI changes. Coming from a background in radically custom manufacturing, I find that the implications of Lean/Agile processes jump out at me. I want to share a piece of that here, specifically Kaizen.

Kaizen is a business practice largely borrowed from the Toyota Production System. In short, it is a way of structuring improvement strategies in a workflow or organization in a cyclical manner. While Kaizen, or continual improvement, can seem obvious, it is the implementation that is so interesting. Toyota frames every problem through the scientific method: meticulously documenting every step in a process, framing hypotheses for improvement, experimenting, and measuring results. While we can get away with pseudo-implementations of documenting the workflow and measuring results, vast improvements can be made as information becomes impersonal and assumptions are continually challenged.

It's best to give an example in an obvious area where continual improvement can help, like Ubiquity commands. Data analysts can identify large problem areas (many failed commands, for example) and alert the UI engineers. The UI engineers perform further analysis and come up with recommendations, then work with the programmers who implement the changes. Finally, the UI engineers tell the data analysts what changed and how they expect the data to change, and wait for the results. Repeat.
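The loop above can be sketched in code, assuming (hypothetically) that the data analysts have per-command counts of failed and successful executions. `flag_problem_commands` picks out the commands to hand to the UI engineers, and `change_helped` checks the hypothesis once the programmers ship a change. All names and thresholds here are illustrative placeholders, and a real comparison would want a significance test rather than a bare inequality.

```python
def failure_rate(failed, succeeded):
    """Share of executions that failed."""
    return failed / (failed + succeeded)


def flag_problem_commands(stats, max_failure_rate=0.5, min_attempts=20):
    """Return commands to hand to the UI engineers, worst first.

    `stats` maps a command name to (failed, succeeded) counts. Both
    thresholds are hypothetical knobs for one improvement cycle.
    """
    flagged = []
    for command, (failed, succeeded) in stats.items():
        if failed + succeeded < min_attempts:
            continue  # too little data to judge this cycle
        rate = failure_rate(failed, succeeded)
        if rate > max_failure_rate:
            flagged.append((rate, command))
    # Highest failure rate first; ties broken alphabetically.
    flagged.sort(key=lambda item: (-item[0], item[1]))
    return [command for _, command in flagged]


def change_helped(before, after):
    """Naive check: did the failure rate drop after the change shipped?"""
    return failure_rate(*after) < failure_rate(*before)


# Illustrative counts only, not data from these sessions.
stats = {"email": (60, 40), "define": (35, 15), "map": (10, 90), "translate": (5, 3)}
print(flag_problem_commands(stats))  # ['define', 'email']
print(change_helped((60, 40), (30, 70)))  # True
```

The minimum-attempts cutoff is what keeps one cycle from chasing noise: a command with a handful of uses can show a dramatic failure rate by chance.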

Google has similar processes; Jen Fitzpatrick gave a particularly tidy example of Lean in software development in a talk about the Google spellchecker.

It's ~18:00 in. <video type="googlevideo" id="-6459171443654125383" width="640" height="480" desc="Original video" frame="true" position="center"/>

Streaming UI

We are uniquely positioned to take advantage of Kaizen techniques because:

- We have a huge user-base

- Ubiquity could eventually support streaming UIs (core commands and CSS updated via subscription feeds)

Anyone remotely familiar with web development should have noticed the huge impact analytics has had on web and UI development. However, companies who have aligned UI engineering and backend development with Kaizen reap rewards on a different plane:

"We don't assume anything works and we don't like to make predictions without real-world tests. Predictions color our thinking. So, we continually make this up as we go along, keeping what works and throwing away what doesn't. We've found that about 90% of it doesn't work." -Lead designer at Netflix

Netflix makes major changes every 2 weeks, and most of them fail! We make changes every 2 months, and if 90% of those are failing... Granted, the Netflix team has a very well fleshed-out UI, so they are bound to have a higher failure rate, but their rapid development cycle lets them challenge previous assumptions and scour out problems.

UI Triangulation

See main article UI Triangulation

Measuring the impact of changes to our UI is trickier for us. Google and Netflix can just examine their server logs, but we can't (and won't) log every keystroke. That's okay, though: if you watched all of the Google talk above, you will have noticed that Jen mentions how they have tied in their user support staff and technical engineers. The UI world calls this triangulation. Reports come in many forms: besides Test Pilot, we also get information flows from Get Satisfaction, Google Groups, Bugzilla, Trac, Mozilla quality control, etc.

Separately, each of these places has its purpose, but by connecting these information flows, or creating one-piece flow (another TPS principle), you can triangulate problems while simultaneously increasing the value of participation in all of the systems. In short, problems are easier to identify if all the data vectors are aligned with one another, for example by reporting the severity and frequency of GSFN complaints in Trac. This applies to non-UI problems as well; it's vital for the web of trust.
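As a sketch of aligning one such data vector, assume (hypothetically) that each Get Satisfaction (GSFN) complaint has already been linked to a Trac ticket and given a severity score. The summary below is what might be posted to each ticket: how often users hit the problem and the worst severity reported. The `Complaint` record, the severity scale, and the ticket numbers are illustrative, not anything GSFN or Trac provides.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Complaint:
    """A support complaint linked to a Trac ticket (hypothetical record)."""
    ticket: int
    severity: int  # hypothetical scale: higher is worse


def summarize(complaints):
    """Return {ticket: (frequency, worst_severity)}, most frequent first."""
    frequency = Counter(c.ticket for c in complaints)
    worst = {}
    for c in complaints:
        worst[c.ticket] = max(worst.get(c.ticket, c.severity), c.severity)
    return {t: (n, worst[t]) for t, n in frequency.most_common()}


# Hypothetical ticket numbers, for illustration only.
reports = [Complaint(101, 2), Complaint(101, 3), Complaint(202, 1)]
print(summarize(reports))  # {101: (2, 3), 202: (1, 1)}
```

Reporting the worst severity alongside frequency keeps a rare but blocking problem from being buried under common, minor annoyances.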

By combining all of these sources of information, we can get a very accurate picture of things. By analogy, think about how tainted political polling samples are: they consist of people with landline phones who happen to be home and are willing to spend 10-30 minutes answering questions. Yet by combining polls and weighting them based on historical averages, Pollyvote predicted the 2008 US presidential election's popular vote to within a single percentage point.

To get started, it would be smart to bake these connections into our collection mechanisms and workflows, so that they either sync with other systems automatically or make manual syncing very fast. A fully connected flow would combine GSFN (our customer-facing solution) with Trac (our developer solution). BUT that would lead to too much clutter in the Trac database, and those problems would have to be chunked and worked out first.

If we frame everything as a scientific test (as Kaizen does), we can know when to stop. How much value any single step provides, weighed against its drawbacks, determines the resources spent on it. For example, we might start by putting the GSFN box on the download page and improving manual syncing, then manually connect the RSS feeds to specific Trac tickets, later add a script to update GSFN when a corresponding Trac ticket closes, eventually automatically import only certain ticket types, and so on.
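One of those later steps, updating GSFN when a corresponding Trac ticket closes, could start as small as the sketch below. It assumes a hypothetical mapping from GSFN topic IDs to Trac ticket numbers and a set of tickets closed since the last run; fetching ticket status and posting the replies are left out, since they depend on each service's own API, which this sketch does not attempt to model.

```python
def closure_notices(links, closed_tickets):
    """Return {topic_id: message} for topics whose Trac ticket just closed.

    `links` maps a hypothetical GSFN topic id to a Trac ticket number;
    `closed_tickets` holds ticket numbers closed since the last run.
    """
    notices = {}
    for topic, ticket in sorted(links.items()):
        if ticket in closed_tickets:
            notices[topic] = (
                f"Trac ticket #{ticket} has been closed. "
                "Please let us know if you still see this problem."
            )
    return notices


# Hypothetical topic ids and ticket numbers.
links = {"topic-a": 101, "topic-b": 202, "topic-c": 101}
for topic, message in closure_notices(links, {101}).items():
    print(topic, message)
```

Keeping the matching logic separate from the network calls means the step can run by hand first (printing the notices for someone to paste) and be automated later, in line with the incremental approach described above.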

Sessions

Tests 1 and 2 were dummy tests; 3 and 4 were lost due to encoding errors.