Socorro:Hadoop

Mapper to PyProc communication

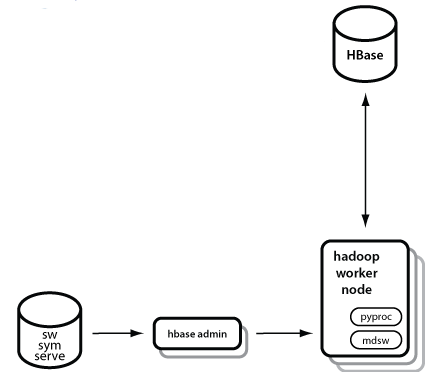

Because each node will run N map tasks, we need to ensure that they are not all blocked waiting to communicate with a single PyProc.

We'll need the PyProc daemon to use a worker pool. It will spin up N workers and hand off crash reports submitted by the map tasks to waiting workers.
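Below is a minimal sketch of that hand-off. It assumes PyProc's per-crash processing can be wrapped in a single callable. `process_crash` and `WorkerPool` are hypothetical names, not existing Socorro code. The sketch uses threads on the assumption that each worker spends most of its time waiting on its mdsw. For comparison, the standard library's `concurrent.futures.ThreadPoolExecutor` provides similar queue-and-workers behavior out of the box.

```python
import queue
import threading

N_WORKERS = 8  # assumption: one worker per map task running on the node


def process_crash(ooid):
    """Hypothetical stand-in for PyProc's real per-crash processing."""
    return {"ooid": ooid, "status": "processed"}


class WorkerPool:
    """Spins up N workers that take crash reports from one shared queue."""

    def __init__(self, n_workers, handler):
        self._jobs = queue.Queue()
        self._handler = handler
        self._threads = [
            threading.Thread(target=self._run, daemon=True)
            for _ in range(n_workers)
        ]
        for thread in self._threads:
            thread.start()

    def _run(self):
        while True:
            ooid, reply = self._jobs.get()  # blocks until a report arrives
            if reply is None:               # shutdown sentinel
                return
            try:
                reply.put(self._handler(ooid))
            except Exception as exc:        # hand the error back; keep the worker alive
                reply.put(exc)

    def submit(self, ooid):
        """Queue one crash report; the returned queue receives the result or exception."""
        reply = queue.Queue(maxsize=1)
        self._jobs.put((ooid, reply))
        return reply

    def shutdown(self):
        """Stop every worker once the already-queued reports are processed."""
        for _ in self._threads:
            self._jobs.put((None, None))
        for thread in self._threads:
            thread.join()
```

A map task's request would call `pool.submit(ooid)` and then block on `reply.get()`. Because all workers pull from one queue, a slow crash occupies only one worker while the others keep serving the remaining map tasks.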

Each worker will also need its own mdsw to communicate with. Rather than requesting further changes to mdsw to make it operate as a worker pool, it would probably be easiest to have each PyProc worker start a dedicated mdsw instance of its own.
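The following sketch shows one way to pair a worker with its own mdsw. It assumes mdsw can run as a long-lived child process that answers one request per line on stdin/stdout. That protocol, `MDSW_CMD`, and `PyProcWorker` are all hypothetical, and a tiny Python script stands in for mdsw so the example runs anywhere. On close, the worker sends EOF and kills mdsw if it does not exit, which covers orderly shutdown. It does not cover a worker that is itself killed abruptly.

```python
import subprocess
import sys

# Hypothetical stand-in for a long-running mdsw that reads one dump path per line
# on stdin and writes one result line to stdout. The real mdsw command line and
# protocol are not specified in this design; replace MDSW_CMD accordingly.
MDSW_CMD = [
    sys.executable, "-c",
    "import sys\n"
    "for line in iter(sys.stdin.readline, ''):\n"
    "    print('stackwalked ' + line.strip(), flush=True)\n",
]


class PyProcWorker:
    """One PyProc worker paired with a dedicated mdsw child process."""

    def __init__(self, cmd=MDSW_CMD):
        # Each worker starts its own mdsw, so workers never contend for one.
        self.mdsw = subprocess.Popen(
            cmd, stdin=subprocess.PIPE, stdout=subprocess.PIPE,
            text=True, bufsize=1,  # line-buffered: each request is sent promptly
        )

    def process(self, dump_path):
        """Send one dump path to this worker's mdsw and return its reply line."""
        self.mdsw.stdin.write(dump_path + "\n")
        self.mdsw.stdin.flush()
        return self.mdsw.stdout.readline().rstrip("\n")

    def close(self):
        """Shut down the paired mdsw so it does not outlive the worker."""
        try:
            self.mdsw.stdin.close()  # EOF tells a healthy mdsw to exit
        except BrokenPipeError:
            pass                     # mdsw had already died
        try:
            self.mdsw.wait(timeout=5)
        except subprocess.TimeoutExpired:
            self.mdsw.kill()         # last resort: don't leave an orphan behind
            self.mdsw.wait()
        self.mdsw.stdout.close()

    def __enter__(self):
        return self

    def __exit__(self, *exc_info):
        self.close()
```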

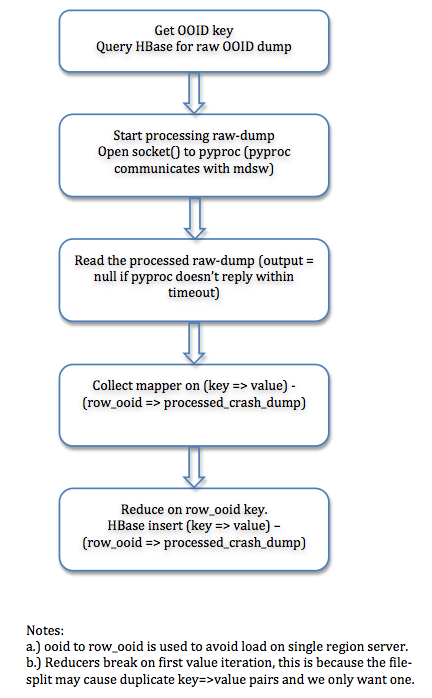

Hadoop Flowchart

Each Hadoop job reads a list of OOIDs, splits it, and passes the OOIDs to the mappers.

Each mapper opens a socket connection to PyProc and sends it a request to process the raw dumps.
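The mapper's side of this exchange might look like the sketch below. It is written as a Python Hadoop Streaming mapper purely for illustration, and the real mappers may be written differently. The address, the one-JSON-object-per-line protocol, and the function names are assumptions, not PyProc's actual interface. The socket timeout is where a stuck PyProc would surface as a timeout for the job.

```python
import json
import socket
import sys

PYPROC_ADDR = ("localhost", 9999)  # hypothetical address of the node-local PyProc daemon


def request_processing(ooid, addr=PYPROC_ADDR, timeout=30.0):
    """Ask PyProc to process one crash and return its decoded reply.

    Assumes a hypothetical protocol: one JSON object per line in each direction.
    A PyProc that does not answer in time raises socket.timeout.
    """
    with socket.create_connection(addr, timeout=timeout) as sock:
        sock.sendall((json.dumps({"ooid": ooid}) + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reply:
            line = reply.readline()
    if not line:
        raise ConnectionError("PyProc closed the connection without replying")
    return json.loads(line)


def main():
    """Streaming-style mapper: OOIDs in on stdin, "ooid<TAB>json" lines out to the reducer."""
    for line in sys.stdin:
        ooid = line.strip()
        if ooid:
            print(ooid + "\t" + json.dumps(request_processing(ooid)))
```

Under Hadoop Streaming, `main()` would serve as the mapper entry point. Hadoop's input splitting decides which OOIDs reach each mapper's stdin.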

The mapper then collects the raw dumps and sends them to the reducer.

The reducer inserts the raw dump and certain processed columns into HBase.
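The reducer step could be sketched as follows. It assumes Hadoop Streaming delivers the mapper output as key-sorted "ooid<TAB>json" lines. The column names (`raw_data:dump`, `processed_data:*`) and the chosen processed fields are hypothetical placeholders, not Socorro's schema. The actual HBase write is left to a caller-supplied `put_row` function, which could wrap an HBase client such as happybase's `Table.put`.

```python
import itertools
import json
import sys

# Hypothetical HBase column names; the real Socorro schema is not specified here.
RAW_COLUMN = "raw_data:dump"
PROCESSED_FIELDS = ("signature", "product", "version")  # assumed subset to store


def parse(lines):
    """Split "ooid<TAB>json" lines into (ooid, record) pairs."""
    for line in lines:
        ooid, _, payload = line.rstrip("\n").partition("\t")
        yield ooid, json.loads(payload)


def reduce_rows(lines):
    """Merge all records for each OOID into one (row_key, columns) pair."""
    # Input is sorted by key, so groupby sees every OOID exactly once.
    for ooid, group in itertools.groupby(parse(lines), key=lambda kv: kv[0]):
        columns = {}
        for _, record in group:
            if "raw_dump" in record:
                columns[RAW_COLUMN] = record["raw_dump"]
            for field in PROCESSED_FIELDS:
                if field in record:
                    columns["processed_data:" + field] = str(record[field])
        yield ooid, columns


def main(put_row, lines=sys.stdin):
    """Write each merged row; put_row(row_key, columns) performs the HBase insert."""
    for row_key, columns in reduce_rows(lines):
        put_row(row_key, columns)
```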

Open questions

- Is there a Python library that provides this worker-pool design?

- If an mdsw or a PyProc worker malfunctions, how can we ensure both get cleaned up (i.e., avoid leaving hundreds of orphaned mdsw processes behind)?

- If N = 8, we would have at least 24 active threads during a processing job (a map task, a PyProc worker, and an mdsw for each of the 8 slots). That said, within each set of three (map task, PyProc worker, mdsw), only one will need the CPU at any given time. We'll need to determine whether this is too much load on the node, especially since the cluster will also need to run other types of MR jobs.

- What steps should we take if PyProc returns a large number of timeouts for a given job (e.g., send an email alert, stop the Hadoop job)? And what timeout threshold should trigger the alert action?
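Whatever threshold is chosen, the alert trigger itself is simple to express. In the sketch below, `TimeoutMonitor` is hypothetical. Its defaults (alert when more than 5% of at least 100 requests time out) are placeholders, not a proposed answer to the threshold question. The `on_alert` callback is where an email alert or a job kill would be wired in.

```python
import threading


class TimeoutMonitor:
    """Counts PyProc timeouts for one job and alerts once a threshold is crossed."""

    def __init__(self, on_alert, max_timeout_ratio=0.05, min_requests=100):
        self.on_alert = on_alert                    # e.g. send email, stop the job
        self.max_timeout_ratio = max_timeout_ratio  # placeholder threshold
        self.min_requests = min_requests            # avoid alerting on tiny samples
        self.requests = 0
        self.timeouts = 0
        self.alerted = False
        self._lock = threading.Lock()               # safe to share between workers

    def record(self, timed_out):
        """Record one request outcome; fire on_alert at most once per job."""
        with self._lock:
            self.requests += 1
            if timed_out:
                self.timeouts += 1
            should_alert = (
                not self.alerted
                and self.requests >= self.min_requests
                and self.timeouts / self.requests > self.max_timeout_ratio
            )
            if should_alert:
                self.alerted = True
                counts = (self.timeouts, self.requests)
        if should_alert:
            self.on_alert(*counts)  # called outside the lock
```

Requiring a minimum sample size keeps a couple of early timeouts from stopping a job that would otherwise succeed.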