The Necko Story

A Necko introduction story, written 2019-04-11

Long, long ago there was a browser called Firefox, and it ran as a single process. Inside it, for all those 10+ years, there has always been the Necko core. It had two threads: the main thread and the socket polling thread. It lived under the rule of The Gecko, and as time passed, Necko grew and grew and grew...

Yeah, Necko is old and big.

Before you read on, you should already know what an ‘interface’ (in an .idl file) and an ‘IPC protocol’ (in an .ipdl file) mean in the Mozilla world.

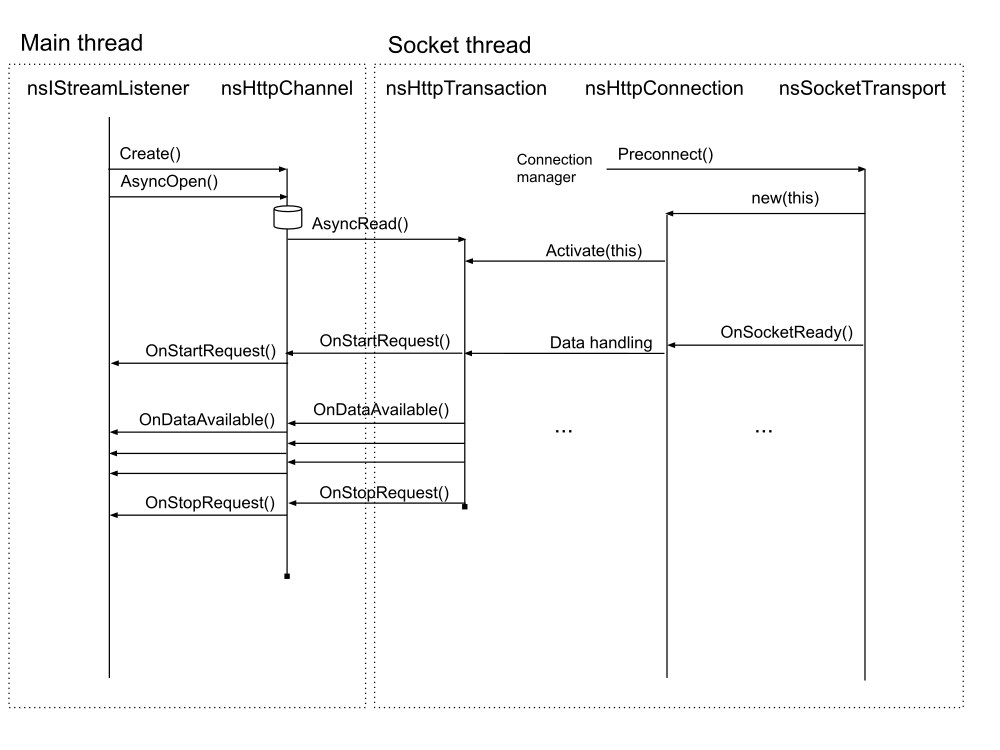

Let’s start with some essentials of how Necko and Gecko communicate. When Firefox navigates to a web page, or when an already loading web page wants to load a subresource, Gecko creates and then opens a ‘channel’ object for the URL. The channel is constructed by a ‘handler’ mapped to the scheme of the URL. Once you have the channel in your hands, you call the ‘asyncOpen(listener)’ method on it, which starts the load. Status and data are delivered asynchronously to the ‘listener’, which implements three callback methods: onStartRequest(), onDataAvailable(data) and onStopRequest(status). The listener is the consumer of the data, be it an HTML parser, an image decoder, or a CSS or JS loader. This is the very essence, the basic set of interfaces, of how Necko and Gecko talk to each other.
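To make this contract concrete, here is a minimal Python sketch, assuming a toy channel that delivers a fixed body synchronously. The names `ToyChannel`, `HANDLERS`, `new_channel` and `CollectingListener` are hypothetical, and the snake_case methods only mirror onStartRequest/onDataAvailable/onStopRequest; the real interfaces are XPCOM interfaces defined in .idl files, their callbacks carry more arguments, and they arrive asynchronously.

```python
from typing import Callable, Dict, List


class ToyChannel:
    """Stand-in channel that delivers a fixed body in 4-byte chunks."""

    def __init__(self, url: str, body: bytes) -> None:
        self.url = url
        self._body = body

    def async_open(self, listener) -> None:
        # A real channel returns at once and calls the listener back later
        # from the event loop; here the callbacks run synchronously.
        listener.on_start_request()
        for i in range(0, len(self._body), 4):
            listener.on_data_available(self._body[i:i + 4])
        listener.on_stop_request(0)  # 0 mirrors NS_OK, i.e. success


# The handler is picked by URL scheme and constructs the channel.
HANDLERS: Dict[str, Callable[[str], ToyChannel]] = {
    "http": lambda url: ToyChannel(url, b"<html></html>"),
}


def new_channel(url: str) -> ToyChannel:
    scheme = url.split(":", 1)[0].lower()
    return HANDLERS[scheme](url)


class CollectingListener:
    """Consumer standing in for an HTML parser or an image decoder."""

    def __init__(self) -> None:
        self.events: List[str] = []
        self.data = b""

    def on_start_request(self) -> None:
        self.events.append("start")

    def on_data_available(self, data: bytes) -> None:
        self.events.append("data")
        self.data += data

    def on_stop_request(self, status: int) -> None:
        self.events.append(f"stop:{status}")
```

Calling `new_channel("http://example.com/").async_open(CollectingListener())` drives the listener through start, the data chunks, and stop, always in that order.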

I will start with how the core of our HTTP implementation works, more or less as it was written years ago. It still exists in the tree in largely unchanged form. Multiprocess support is being added incrementally as more or less transparent layers above and in between.

For the http:// scheme, the implementation of a ‘channel’ is nsHttpChannel, with ~10000 lines of code. It currently lives and operates only on the main thread. It’s designed to process only one request; its URL does not change during the lifetime of the channel.

The channel handles the server response: not just a plain 200, but also redirects, authentication to proxies and servers, revalidation of cached content, storing content in the HTTP cache, and cookies.
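The shape of that response handling can be sketched as a dispatch on the status code. This is a hypothetical, heavily simplified Python function, not nsHttpChannel's logic; it assumes lower-cased header names and leaves out most real cases. Note how a redirect yields a new target URL instead of changing the current one, since a channel's URL never changes.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

REDIRECT_CODES = {301, 302, 303, 307, 308}


@dataclass
class Response:
    status: int
    headers: Dict[str, str] = field(default_factory=dict)  # lower-cased names


def next_step(resp: Response, cached_body: Optional[bytes]) -> str:
    """Hypothetical, simplified choice of what to do with a response head."""
    if resp.status in REDIRECT_CODES and "location" in resp.headers:
        # The channel's URL never changes: a redirect means a new channel.
        return "redirect:" + resp.headers["location"]
    if resp.status == 401:
        # Answer the WWW-Authenticate challenge with an Authorization header.
        return "authenticate-server"
    if resp.status == 407:
        # Answer the Proxy-Authenticate challenge with Proxy-Authorization.
        return "authenticate-proxy"
    if resp.status == 304 and cached_body is not None:
        # Revalidation succeeded: serve the cached entry.
        return "use-cache"
    # Otherwise deliver the body; storing it in the cache (if cacheable)
    # and processing Set-Cookie would happen along the way.
    return "deliver"
```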

The channel does a few things before it decides to go to the network. It determines whether the URL belongs to a tracking domain, resolves the proxy for the URL, consults the HTTP cache for an existing entry and examines its status, and adds cookies and cached authorization headers. Overall, the opening process is very complicated and highly asynchronous. There is also Service Worker interception and a number of other small tweaks and hooks.
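Examining a cache entry's status mostly boils down to deciding whether it can be used as is, must be revalidated with a conditional request, or is useless. The sketch below applies a much simplified form of the HTTP caching rules (RFC 9111): it only looks at `max-age` and ignores `Expires`, the `Age` header, heuristic freshness, `Vary` and `no-cache`. `CacheEntry`, `cache_decision` and `conditional_headers` are hypothetical names, not the HTTP cache API.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CacheEntry:
    stored_at: float          # seconds; when the response was received
    max_age: Optional[int]    # from Cache-Control: max-age, if present
    etag: Optional[str] = None
    last_modified: Optional[str] = None


def cache_decision(entry: Optional[CacheEntry], now: float) -> str:
    """Return 'network', 'use-cache' or 'revalidate' (simplified rules)."""
    if entry is None:
        return "network"
    age = now - entry.stored_at
    if entry.max_age is not None and age < entry.max_age:
        return "use-cache"       # still fresh
    if entry.etag or entry.last_modified:
        return "revalidate"      # stale, but a validator is available
    return "network"             # stale and cannot be validated


def conditional_headers(entry: CacheEntry) -> Dict[str, str]:
    """Headers that turn the request into a revalidation of the entry."""
    headers: Dict[str, str] = {}
    if entry.etag:
        headers["If-None-Match"] = entry.etag
    if entry.last_modified:
        headers["If-Modified-Since"] = entry.last_modified
    return headers
```

A 304 answer to such a conditional request means the cached entry can be served after all.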

When we eventually decide to go to the network, the channel creates a new object, a ‘transaction’ (nsHttpTransaction). This object is a bridge between the main thread and the socket thread, and it represents a single request. It can be queued if there is no HTTP/1 connection available (due to parallelism limits) or no existing HTTP/2 session to dispatch on. nsHttpTransaction is responsible for building the request head with its headers and sending it, along with any POST data, on the given connection or session. It then does the initial parsing of the response head and headers as they arrive from the server, and handles data framing and chunked decoding, so that nsHttpChannel can consume the response transparently.
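Chunk decoding is a good example of what the transaction hides from the channel. With HTTP/1.1 chunked transfer coding, the body arrives as hex-sized chunks ending with a zero-sized chunk and optional trailers, and the bytes can be split at any point between socket reads. A minimal incremental decoder in Python might look like this, assuming well-formed input and ignoring trailer contents and size limits; `ChunkedDecoder` is a hypothetical name.

```python
class ChunkedDecoder:
    """Incremental decoder for HTTP/1.1 chunked transfer coding.

    feed() accepts bytes in arbitrary pieces, as they come off the socket,
    and returns the decoded body bytes that became available.
    """

    def __init__(self) -> None:
        self._buf = b""
        self._remaining = 0    # body bytes left in the current chunk
        self._state = "size"   # size -> data -> crlf -> size ... trailer -> done
        self.done = False

    def feed(self, data: bytes) -> bytes:
        self._buf += data
        out = bytearray()
        while True:
            if self._state == "size":
                line_end = self._buf.find(b"\r\n")
                if line_end < 0:
                    break  # need more input
                size_field = self._buf[:line_end].split(b";", 1)[0]  # drop extensions
                self._remaining = int(size_field.strip(), 16)
                self._buf = self._buf[line_end + 2:]
                self._state = "data" if self._remaining else "trailer"
            elif self._state == "data":
                if not self._buf:
                    break
                take = self._buf[:self._remaining]
                out += take
                self._remaining -= len(take)
                self._buf = self._buf[len(take):]
                if self._remaining == 0:
                    self._state = "crlf"
            elif self._state == "crlf":
                if len(self._buf) < 2:
                    break
                if self._buf[:2] != b"\r\n":
                    raise ValueError("malformed chunk terminator")
                self._buf = self._buf[2:]
                self._state = "size"
            elif self._state == "trailer":
                line_end = self._buf.find(b"\r\n")
                if line_end < 0:
                    break
                line = self._buf[:line_end]
                self._buf = self._buf[line_end + 2:]
                if not line:  # an empty line ends the message
                    self._state = "done"
                    self.done = True
            else:  # "done"
                break
        return bytes(out)
```

Feeding `b"4\r\nWiki\r\n5\r\npedia\r\n0\r\n\r\n"` in any split, even one byte at a time, yields `b"Wikipedia"` and sets `done`.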

The actual connection with the server is handled by yet another object, nsHttpConnection. In the HTTP/1 world this represents one physical TCP/TLS connection, usually a keep-alive one, with the end server or a proxy. A ‘connection’ can live relatively long and can be reused for many transactions. Transactions are dispatched on a connection one at a time; we don’t support HTTP/1.1 pipelining. For HTTP/2, there is an extra layer between transactions and a connection. Each transaction is served by one HTTP/2 stream (1:1), many streams belong to one HTTP/2 session (N:1), and each session uses one HTTP connection (1:1). HTTP/2 is transparent to transactions and channels.
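These dispatch rules can be modeled with a toy connection manager. In this hypothetical Python sketch, an HTTP/1 connection carries one transaction at a time and is reused once it is free, at most `max_per_host` connections are opened per host (6 is an assumed default here), and extra transactions wait in a queue; a host assumed to speak HTTP/2 gets a single connection that accepts every transaction as a new stream. Real code also deals with idle timeouts, proxies and protocol negotiation, none of which appears here.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Optional, Set


@dataclass
class Connection:
    host: str
    http2: bool = False
    active: List[str] = field(default_factory=list)  # transactions (HTTP/2: streams)

    def can_take(self) -> bool:
        # HTTP/1: one transaction at a time (no pipelining).
        # HTTP/2: any number of streams multiplexed on one session.
        return self.http2 or not self.active


class ToyConnectionManager:
    def __init__(self, max_per_host: int = 6,
                 h2_hosts: Optional[Set[str]] = None) -> None:
        self.max_per_host = max_per_host
        self.h2_hosts = h2_hosts or set()
        self.conns: Dict[str, List[Connection]] = {}
        self.pending: Dict[str, Deque[str]] = {}

    def dispatch(self, host: str, txn: str) -> Optional[Connection]:
        """Place txn on a connection, or queue it and return None."""
        conns = self.conns.setdefault(host, [])
        for conn in conns:  # reuse an idle keep-alive connection or the HTTP/2 session
            if conn.can_take():
                conn.active.append(txn)
                return conn
        if len(conns) < self.max_per_host:
            conn = Connection(host, http2=host in self.h2_hosts)
            conn.active.append(txn)
            conns.append(conn)
            return conn
        self.pending.setdefault(host, deque()).append(txn)
        return None

    def finish(self, conn: Connection, txn: str) -> Optional[str]:
        """Mark txn done; hand the freed connection to a queued transaction."""
        conn.active.remove(txn)
        queue = self.pending.get(conn.host)
        if queue and conn.can_take():
            nxt = queue.popleft()
            conn.active.append(nxt)
            return nxt
        return None
```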

Note that for HTTP/2 we use connection coalescing: requests to two different hosts can share one session when the server certificate of that session lists both host names in its Subject Alternative Name list.
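A rough Python sketch of the coalescing decision: the existing session's certificate must cover the new host, either exactly or through a single left-most wildcard label. The address-overlap condition in `can_coalesce` is an assumption of this sketch, not something stated above, and both function names are hypothetical.

```python
from typing import Iterable, Set


def name_matches(pattern: str, host: str) -> bool:
    """Certificate name matching with at most one left-most wildcard label."""
    pattern, host = pattern.lower(), host.lower()
    if pattern.startswith("*."):
        # "*.example.com" covers "a.example.com",
        # but not "example.com" or "a.b.example.com".
        head, sep, rest = host.partition(".")
        return bool(head) and bool(sep) and rest == pattern[2:]
    return pattern == host


def can_coalesce(new_host: str, cert_sans: Iterable[str],
                 session_ips: Set[str], new_host_ips: Set[str]) -> bool:
    """Hypothetical check: may new_host reuse an existing HTTP/2 session?"""
    covered = any(name_matches(p, new_host) for p in cert_sans)
    # Assumption: the two hosts must also resolve to an overlapping address.
    return covered and bool(session_ips & new_host_ips)
```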

Beneath the connection there is yet another object: a ‘socket’, nsSocketTransport. It encapsulates a TCP socket, optionally with TLS layered transparently on top. It lives only on the socket thread. This thread both polls all active sockets and handles events dispatched to it. To wake the thread up when an event arrives, we add a local loopback socket pair to the list of sockets we poll.
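The wake-up trick is easy to show with Python's `selectors` module. In this sketch, `socket.socketpair()` stands in for the loopback socket pair: other threads post an event by appending it to a queue and writing one byte to the pair, which makes the blocked `select()` return so the socket thread can run the event. `ToySocketThread` is a hypothetical name, and the loop leaves out servicing real data sockets.

```python
import selectors
import socket
import threading
from collections import deque
from typing import Callable, Deque


class ToySocketThread:
    """Polls sockets and runs posted events; a socket pair wakes up the poll."""

    def __init__(self) -> None:
        self._sel = selectors.DefaultSelector()
        self._wake_r, self._wake_w = socket.socketpair()
        self._wake_r.setblocking(False)
        self._sel.register(self._wake_r, selectors.EVENT_READ)
        self._events: Deque[Callable[[], None]] = deque()
        self._lock = threading.Lock()
        self._running = True

    def dispatch(self, event: Callable[[], None]) -> None:
        """Callable from any thread: queue the event, then wake the poll."""
        with self._lock:
            self._events.append(event)
        self._wake_w.send(b"x")

    def stop(self) -> None:
        self.dispatch(self._request_stop)

    def _request_stop(self) -> None:
        self._running = False

    def run(self) -> None:
        while self._running:
            for key, _ in self._sel.select():  # blocks until a socket is ready
                if key.fileobj is self._wake_r:
                    try:
                        self._wake_r.recv(4096)  # drain the wake-up bytes
                    except BlockingIOError:
                        pass
                # A real loop would service data sockets here as well.
            with self._lock:
                pending = list(self._events)
                self._events.clear()
            for event in pending:
                event()
        self._sel.close()
        self._wake_r.close()
        self._wake_w.close()
```

Run `run()` on its own thread; `dispatch()` and `stop()` can then be called from any other thread. Because the byte is written only after the event is queued, a wake-up can never be consumed before its event is visible.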

A flow chart of this very core looks like this:

And now, let’s look at Electrolysis (e10s), which is our code name for process separation in Firefox. We made the interprocess split between the channel and the listener. The actual consuming listeners live in ‘child’ (also called ‘content’) processes, while nsHttpChannel remains in the ‘parent’ (also ‘master’ or ‘main’) process. We have a ‘channel’ implementation specific to child processes, HttpChannelChild, and content uses it whenever it loads a resource. It then opens an IPC messaging pipe. In the parent process, an HttpChannelParentListener object serves as an opaque ‘listener’ to nsHttpChannel. HttpChannelParent is the parent side of the IPC protocol talking to HttpChannelChild, and it drives the creation of nsHttpChannel and HttpChannelParentListener. There is a one-to-one relationship between HttpChannelParent and nsHttpChannel. HttpChannelParentListener lives longer when we handle redirects, because a redirect creates a new nsHttpChannel for loading the target URL (and with it a new HttpChannelChild/Parent pair).
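The forwarding done across this split can be sketched with a `multiprocessing` pipe. In this hypothetical Python model, `ParentListener` plays the opaque listener the real channel sees and turns each callback into a message, while `ChildChannel` replays those messages on the content listener. The message tuples are made up for illustration and are not the real IPDL messages; both pipe ends live in one process here so the sketch is easy to run, whereas in Firefox they sit in different processes.

```python
from multiprocessing import Pipe
from multiprocessing.connection import Connection
from typing import List


class ParentListener:
    """Parent side: the opaque listener the real channel talks to.

    It consumes nothing itself; every callback becomes an IPC message.
    """

    def __init__(self, conn: Connection) -> None:
        self._conn = conn

    def on_start_request(self) -> None:
        self._conn.send(("start",))

    def on_data_available(self, data: bytes) -> None:
        self._conn.send(("data", data))

    def on_stop_request(self, status: int) -> None:
        self._conn.send(("stop", status))


class ChildChannel:
    """Child side: replays received messages on the content listener."""

    def __init__(self, conn: Connection, listener) -> None:
        self._conn = conn
        self._listener = listener

    def pump(self) -> None:
        while True:
            kind, *args = self._conn.recv()
            if kind == "start":
                self._listener.on_start_request()
            elif kind == "data":
                self._listener.on_data_available(*args)
            elif kind == "stop":
                self._listener.on_stop_request(*args)
                return  # the load is over


class RecordingListener:
    """Content-process consumer that just records what it was given."""

    def __init__(self) -> None:
        self.log: List[object] = []

    def on_start_request(self) -> None:
        self.log.append("start")

    def on_data_available(self, data: bytes) -> None:
        self.log.append(data)

    def on_stop_request(self, status: int) -> None:
        self.log.append(status)
```

Keeping the parent-side listener separate from the channel is what lets it outlive a redirect: only the object it forwards for is replaced, not the forwarding itself.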

To make it even more complicated, it has been decided to also move the parts doing the actual networking into yet another process. This is in progress at the time of writing this doc (the Socket Process Isolation project). Here the split is made between nsHttpChannel and nsHttpTransaction. The approach is somewhat similar to how content listeners are separated from channels: there are HttpTransactionParent and HttpTransactionChild, each related one-to-one to its nsHttpChannel (in the parent process) and nsHttpTransaction (in the socket process), respectively.

To close our story with a happy ending: we have a powerful tool to look inside this machinery at run time. We call it logging. It has to be enabled manually; it then writes log files recording all instances of all objects, the actions they went through, and when. Logs are something we often ask users in the outside world for, to help us diagnose problems specific to their setup or use case. In a high percentage of cases we are able to find the cause of the problem from the logs, or to prove that the user is doing something wrong. Logging is very important for us.
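One way to enable logging is through the `MOZ_LOG` and `MOZ_LOG_FILE` environment variables. The small Python helper below only builds such an environment: `MOZ_LOG` takes a comma-separated list of `module:level` pairs plus optional flags such as `timestamp`. The module list (`nsHttp`, `cache2`, `nsSocketTransport`, `nsHostResolver` at level 5) is a common choice for HTTP problems, and `necko_log_env` is a hypothetical name.

```python
import os
from typing import Dict, Mapping, Optional


def necko_log_env(log_file: str, modules: Mapping[str, int],
                  base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of the environment with MOZ_LOG / MOZ_LOG_FILE set."""
    env = dict(os.environ if base is None else base)
    # "timestamp" prefixes each log line with the time; module:level pairs follow.
    parts = ["timestamp"] + [f"{name}:{level}" for name, level in modules.items()]
    env["MOZ_LOG"] = ",".join(parts)
    env["MOZ_LOG_FILE"] = log_file
    return env


HTTP_MODULES = {"nsHttp": 5, "cache2": 5, "nsSocketTransport": 5, "nsHostResolver": 5}
```

For example, `subprocess.run(["firefox"], env=necko_log_env("/tmp/necko.log", HTTP_MODULES))`, assuming a `firefox` binary on the `PATH`, starts Firefox with HTTP logging enabled.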