Gecko:CrossProcessLayers

For context, see Gecko:Layers

Proposal

- Have a dedicated "GPU Process" responsible for all access to the GPU via D2D/D3D/OpenGL

- This process can be killed and restarted to fix leaks or cover for other driver bugs

- This process would be privileged, allowing content processes to be sandboxed but still use the GPU remotely

- In some configurations the GPU process could be a set of threads in a regular browser process (the master process)

- Browser processes (content and chrome) maintain a layer tree on their main threads

- Layer tree is maintained by layout code

- Each transaction that updates a layer tree pushes a set of changes over to a "shadow layer tree"

- This shadow layer tree is what we use for rendering off the main thread

- This is necessary for layer-based animation to work without being blocked by the main thread

- Since we're pushing changes across threads anyway, we might as well push them across process boundaries at the same time, so push them all the way to the GPU process

- Therefore, the GPU process maintains a set of shadow layer trees and is responsible for compositing them

- Compositing a shadow layer tree either results in a buffer that's rendered into a window, or a buffer that is later composited into some other layer tree

- We can reduce VRAM usage at the expense of increased recomposition work by recompositing a content process layer tree every time we composite its parent layer tree and not having a persistent intermediate buffer

- We can control the scheduling of content layer tree composition

This proposal lets us use a generic remoting layer backend. Hardware/platform specific backends are isolated to the GPU process and do not need to do their own remoting.

Implementation Steps

The immediate need is to get something working for Fennec. Proposal:

- Initially, let the GPU process be a thread in the master process

- Build the remoting layers backend

- Publish changes from the content process layer trees and the master process chrome layer tree to shadow trees managed by the GPU thread

Start with bare-bones test application including important use cases. No tile manager. Get remote layers working. Look into best approach for layers tile manager; maybe a new TiledContainer that does caching internally. Not clear whether browser or content process should own this.

Implementation Details for Fennec

- Key question: what cairo backend do we use to draw into ThebesLayers?

- Image backend?

- Allocate shared system memory or bc-cat buffers for the regions of ThebesLayers to update

- Gecko processes draw into those areas using cairo image backend

- GL backend uploads textures from system memory or acquires texture handle for bc-cat buffer across processes (e.g. using texture_to_pixmap); composites those changes into its ThebesLayer buffers

- Windowless plugins suffer, except for Flash where we have NPP_DrawImage and can use layers to composite those images together

- GTK theme rendering suffers

- do we care?

- Xlib backend?

- Allocate textures for changed ThebesLayer areas and map to pixmap using texture_to_pixmap

- Gecko processes draw into those pixmaps using cairo Xlib backend

- GL backend acquires texture handle across processes; composites those changes into its ThebesLayer buffers

- Maybe we can have some XShm or bc-cat hack that lets us do it all ... an X pixmap that we can also poke directly through shared memory and that's also a texture!

Future Details

- How to handle D2D?

- Direct access

- Allocate D3D buffer for changed ThebesLayer areas

- Gecko processes draw into it using cairo D2D backend

- Indirect access (for sandboxed content processes etc)

- Remote cairo calls across to the GPU process, creating a command queue that gets posted instead of a new buffer

Important cases on Fennec

Will the browser process need to see all layers in a content process, or just a single container/image/screen layer? We always want each video in its own layer to decouple its frame rate from content- and browser-process main-thread work. So at minimum, we need to publish a tree consisting of a layer "below" the video, the video layer, and a layer "above" the video. We need a plan for optimal performance (responsiveness and frame rate) for the following cases.

- Panning: browser immediately translates coords of container layer before delivering event to content process. Content (or browser) later uses event to update region painting heuristics.

- Volume rocker zoom: browser immediately sets scaling matrix for container layer (fuzzy zoom). Content (or browser) later uses event to update region painting heuristics.

- Double-tap zoom

- Question: How long does it typically take to determine the zoom target?

- Container layer: can we use layer-tree heuristics to do a fuzzy zoom while content process figures out target? (Better perceived responsiveness.) Might want three-step process: (i) send event to content process, have it determine new viewport (?); (ii) publish that update to browser, browser initiates fuzzy scale; (iii) content process initiates "real" upscale.

- Video: video layers directly connect to the compositor process from off-main-thread in the content process, so that new frames can be published independently of content- and browser-process main threads.

- canvas: Initially can store in SysV shmem. Better would be mapping memory accessible by the video card in a privileged process (e.g. bc-cat), and sharing this mapping to the content process.

- CSS transforms and SVG filters: low priority, assume SW-only in content process for now. CSS transforms might be easy to accelerate, SVG filters may never be (Bas cites problems with the N900's GPU).

- Animations: low priority, not discussed wrt fennectrolysis.

Question: for a given layer subtree, can we reasonably estimate how much CPU and GPU time the transformation/compositing operations will take? We could use this information for distributed scheduling. Low priority.

Concurrency model for remote layers

Kinda somewhat a lower-level implementation detail, kinda somewhat not.

Assume we have a master process M and a slave process S. M and S maintain their own local layer trees M_l and S_l. M_l may have a leaf RemoteContainer layer R into which updates from S are published. The contents of R are immutable wrt M, but M may freely modify R. R contains the "shadow layer tree" R_s published by S. R_s is semantically a copy of a (possibly) partially-composited S_l.

Updates to R_s are atomic wrt painting. When S wishes to publish updates to M, it sends an "Update(cset)" message to M containing all R_s changes to be applied. This message is processed in its own "task" ("event") in M. This task will (?? create a layer tree transaction and ??) apply cset. cset will include layer additions, removals, and attribute changes. Initially we probably want Update(cset) to be synchronous. (cjones believes it can be made asynchronous, but that would add unnecessary complexity for a first implementation.) (Counterpoint: asynchronous layer updates seem counterproductive wrt perf and introduce too many concurrency problems; synchronous seems to be the way to go permanently.) Under the covers (opaque to M), in-place updates will be made to existing R_s layers.
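A minimal sketch of the update step, assuming a toy shadow tree keyed by integer layer ids. `ShadowTree`, `LayerId`, and the edit structs are hypothetical stand-ins, not Gecko types. The point it illustrates is that a whole cset is applied inside one call (one "task"), so a compositor that only paints between calls never observes a half-applied update.

```cpp
#include <cassert>
#include <map>
#include <variant>
#include <vector>

// Hypothetical stand-ins; none of these are real Gecko types.
using LayerId = int;

struct ShadowLayer {
  bool opaque = false;
};

struct AddLayer { LayerId id; };
struct RemoveLayer { LayerId id; };
struct SetOpaque { LayerId id; bool opaque; };
using Edit = std::variant<AddLayer, RemoveLayer, SetOpaque>;

class ShadowTree {
 public:
  // Applies every edit in cset within a single call. Painting is assumed to
  // happen only between calls, never in the middle of one.
  void Update(const std::vector<Edit>& cset) {
    for (const Edit& e : cset) {
      std::visit([this](const auto& edit) { this->Apply(edit); }, e);
    }
    ++mGeneration;  // one generation per applied cset
  }

  bool Has(LayerId id) const { return mLayers.count(id) != 0; }
  bool IsOpaque(LayerId id) const { return mLayers.at(id).opaque; }
  int Generation() const { return mGeneration; }

 private:
  void Apply(const AddLayer& e) {
    bool inserted = mLayers.emplace(e.id, ShadowLayer{}).second;
    assert(inserted && "layer ids must be unique");
    (void)inserted;
  }
  void Apply(const RemoveLayer& e) { mLayers.erase(e.id); }
  void Apply(const SetOpaque& e) {
    // In-place update of an existing shadow layer.
    auto it = mLayers.find(e.id);
    if (it != mLayers.end()) it->second.opaque = e.opaque;
  }

  std::map<LayerId, ShadowLayer> mLayers;
  int mGeneration = 0;
};
```

A synchronous Update would correspond to the sender blocking until `Update` returns; nothing in this sketch models the IPC itself.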

Question: how should M publish updates of R_s to its own master MM? One approach is to apply Update(cset) to R_s, then synchronously publish Update(cset union M_cset) to its master MM. This is an optimization that allows us to maintain copy semantics without actually copying.

A cset C can be constructed by implementing a TransactionRecorder interface for layers (layer managers?). The recorder will observe all mutations performed on a tree and package them into an IPC message. (This interface could also be used for debugging, to dump layer modifications to stdout.)
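One possible shape for such a recorder, sketched under the assumption that a single `Record` hook is enough. All names here (`Mutation`, `CsetRecorder`, `DumpRecorder`, `LayerTree`) are made up for illustration. The same interface serves both uses mentioned above: packaging mutations into a cset and dumping them for debugging.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <vector>

// Hypothetical names throughout; the real interface is still undecided.
using LayerId = int;

struct Mutation {
  enum Kind { Insert, Remove, SetOpaque } kind;
  LayerId layer;
  bool value;  // only meaningful for SetOpaque
};

// Observes every mutation performed on a layer tree.
class TransactionRecorder {
 public:
  virtual ~TransactionRecorder() = default;
  virtual void Record(const Mutation& m) = 0;
};

// Collects mutations into a cset that could later be serialized for IPC.
class CsetRecorder : public TransactionRecorder {
 public:
  void Record(const Mutation& m) override { mCset.push_back(m); }
  // Returns the recorded cset and leaves the recorder empty.
  std::vector<Mutation> TakeCset() {
    std::vector<Mutation> out;
    out.swap(mCset);
    return out;
  }
 private:
  std::vector<Mutation> mCset;
};

// Debugging variant: writes one line per mutation to a stream.
class DumpRecorder : public TransactionRecorder {
 public:
  explicit DumpRecorder(std::ostream& out) : mOut(out) {}
  void Record(const Mutation& m) override {
    static const char* kNames[] = {"Insert", "Remove", "SetOpaque"};
    assert(m.kind >= Mutation::Insert && m.kind <= Mutation::SetOpaque);
    mOut << kNames[m.kind] << " layer=" << m.layer << "\n";
  }
 private:
  std::ostream& mOut;
};

// A toy tree that forwards each mutation to its recorder, if any.
class LayerTree {
 public:
  explicit LayerTree(TransactionRecorder* recorder) : mRecorder(recorder) {}
  void Insert(LayerId id) { Notify({Mutation::Insert, id, false}); }
  void Remove(LayerId id) { Notify({Mutation::Remove, id, false}); }
  void SetOpaque(LayerId id, bool v) { Notify({Mutation::SetOpaque, id, v}); }
 private:
  void Notify(const Mutation& m) { if (mRecorder) mRecorder->Record(m); }
  TransactionRecorder* mRecorder;
};
```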

Video decoding fits into this model: decoders will be a slave S that's a thread in a content process, and the decoders publish updates directly to a master M that's the compositor process. The content and browser main threads will publish special "placeholder" video layers that reference "real" layers in the compositor process.

Implementation

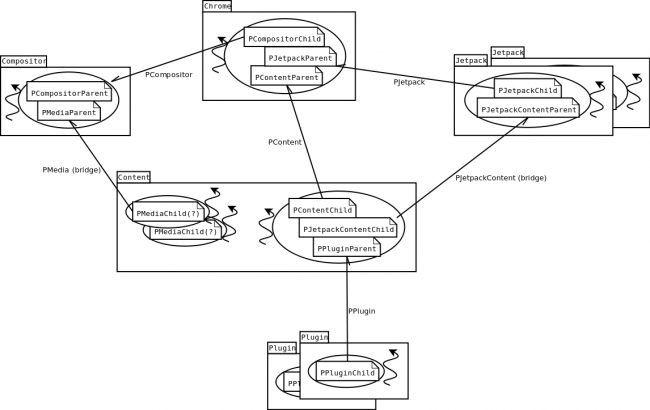

The process architecture for the first implementation will look something like the following.

The big boxes are processes and the circles next to squiggly lines are threads. PFoo refers to an IPDL protocol, and PFoo(Parent|Child) refers to a particular actor.

Layer trees shared across processes may look something like

As in the first diagram, big boxes are processes. The circled item in the Content process is a decoder thread. The arrows drawn between processes indicate the direction in which layer-tree updates will be pushed. All updates will be made through a PLayers protocol. This protocol will likely correspond roughly to a "remote layer subtree manager". A ShadowContainerLayer corresponds to the "other side" of a remote subtree manager; it's the entity that receives layer tree updates from a remote process. The dashed line between the decoder thread and the Content main thread just indicates their relationship; it's not clear what Layers-related information they'll need to exchange, if any.

NB: this diagram assumes that a remote layer tree will be published and updated in its entirety. However, it sometimes might be beneficial to partially composite a subtree before publishing it. This exposition ignores that possibility because it's the same problem wrt IPC.

It's worth calling out in this picture the two types of (non-trivial) shared leaf layers: ShmemLayer and PlaceholderLayer. A ShmemLayer will wrap IPDL Shmem, using whichever backend is best for a particular platform (might be POSIX, SysV, VRAM mapping, ...). Any process holding on to a ShmemLayer can read and write the buffer contents within the constraints imposed by Shmem single-owner semantics. This means, e.g., that if Plugin pushes a ShmemLayer update to Content, Content can twiddle with Plugin's new front buffer before pushing the update to Chrome, and similarly for Chrome before pushing Chrome-->Compositor. We probably won't need this feature.
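To make the single-owner constraint concrete, here is a rough sketch that models a Shmem as a move-only handle. `Buffer`, `ShmemLayer`, and `ShadowShmemLayer` are hypothetical; a `std::unique_ptr<std::string>` merely stands in for shared memory. Publishing gives up the front buffer, and the receiver hands its previous front buffer back for reuse as the sender's back buffer.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for an IPDL Shmem: move-only, so one side owns it.
using Buffer = std::unique_ptr<std::string>;

// Receiving side: keeps the current front buffer and returns the old one.
class ShadowShmemLayer {
 public:
  Buffer Paint(Buffer newFront) {
    std::swap(mFront, newFront);
    return newFront;  // previous front buffer (null on the first paint)
  }
  const std::string* Front() const { return mFront.get(); }
 private:
  Buffer mFront;
};

// Sending side: draws into its back buffer, then gives it up on publish.
class ShmemLayer {
 public:
  ShmemLayer() : mBack(std::make_unique<std::string>()) {}
  void Draw(const std::string& pixels) {
    assert(mBack && "cannot draw without owning a back buffer");
    *mBack = pixels;
  }
  void PublishTo(ShadowShmemLayer& shadow) {
    mBack = shadow.Paint(std::move(mBack));
    // First paint: nothing came back, so allocate a fresh back buffer.
    if (!mBack) mBack = std::make_unique<std::string>();
  }
 private:
  Buffer mBack;
};
```

Because the handle is move-only, the sender cannot touch a buffer after publishing it, which is the property that lets the receiver safely read or modify the new front buffer.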

A PlaceholderLayer refers to a "special" layer with a buffer that's inaccessible to the process with the PlaceholderLayer reference. Above, it refers to the video decoder thread's frame layer. When the decoder paints a new frame, it can immediately push its buffer to Compositor, bypassing Content and Chrome (which might be blocked for a "long time"). In Compositor, however, the PlaceholderLayer magically turns into a ShmemLayer with a readable and writeable front buffer, so there new frames can be painted immediately with proper Z-ordering wrt content and chrome layers. Content can arbitrarily fiddle with the PlaceholderLayer in its subtree while new frames are still being drawn, and on the next Content-->Chrome-->Compositor update push, the changes to PlaceholderLayer position/attributes/etc. will immediately take effect.
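The two update paths can be sketched as follows, assuming placeholders are matched to real layers by an integer id. Every name here is invented for illustration, and a string stands in for the frame buffer. Frames arrive directly from the decoder, placement arrives via the slower Content-->Chrome-->Compositor path, and the compositor combines whatever is newest from each.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>

// Hypothetical sketch; names do not correspond to real Gecko classes.
using PlaceholderId = int;

struct Placement { int x = 0; int y = 0; };

// What Content holds: position/attributes only, no access to pixels.
struct PlaceholderLayer {
  PlaceholderId id;
  Placement placement;
};

// What Compositor holds for the same id: attributes plus a readable frame.
struct ResolvedLayer {
  Placement placement;
  std::string frame;  // stands in for a Shmem-backed front buffer
};

class Compositor {
 public:
  // Decoder path: new frames bypass the Content and Chrome main threads.
  void PushFrame(PlaceholderId id, std::string frame) {
    mLayers[id].frame = std::move(frame);
  }
  // Layer-tree path: applied when a Content-->Chrome-->Compositor update lands.
  void UpdatePlacement(const PlaceholderLayer& p) {
    mLayers[p.id].placement = p.placement;
  }
  const ResolvedLayer& Resolve(PlaceholderId id) const {
    auto it = mLayers.find(id);
    assert(it != mLayers.end() && "unknown placeholder");
    return it->second;
  }
 private:
  std::map<PlaceholderId, ResolvedLayer> mLayers;
};
```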

Strawman PLayers protocol

The rough idea here is to capture all modifications made to a layer subtree during a RemoteLayerManager transaction, package them up into a "changeset" IPC message, send the message to the "other side", then have the other side replay that changeset on its shadow layer tree.

// XXX: not clear whether we want a single PLayer managee or PColorLayer, PContainerLayer, et al.

include protocol PLayer;

// Remotable layer tree

struct InternalNode {

PLayer container;

Node[] kids;

};

union Node {

InternalNode;

PLayer; // leaf

};

// Tree operations comprising an update

struct Insert { Node x; PLayer after; };

struct Remove { PLayer x; };

struct Paint { PLayer x; Shmem frontBuffer; };

struct SetOpaque { PLayer x; bool opaque; };

struct SetClipRect { PLayer x; gfxRect clip; };

struct SetTransform { PLayer x; gfxMatrix transform; };

// ...

union Edit {

Insert;

Remove;

Paint;

SetOpaque;

SetClipRect;

SetTransform;

// ...

};

// Reply to an Update()

// buffer-swap reply sent in response to Paint()

struct SetBackBuffer { PLayer x; Shmem backBuffer; };

// ...?

union EditReply {

SetBackBuffer;

//...?

};

// From this spec, singleton PLayersParent/PLayersChild actors will be

// generated. These will be singletons-per-protocol-tree roughly

// corresponding to a "RemoteLayerManager" or somesuch

sync protocol PLayers {

// all the protocols with a "layers" mix-in

manager PCompositor or PContent or PMedia or PPlugin;

manages PLayer;

parent:

sync Publish(Node root);

sync Update(Edit[] cset)

returns(EditReply[] reply);

// ... other lifetime management stuff here

state INIT:

recv Publish goto UPDATE;

state UPDATE:

recv Update goto UPDATE;

//... other lifetime management stuff here

};
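The state machine in the strawman is small enough to model directly. The following C++ sketch is an illustration only, using hypothetical `State` and `Msg` enums; in practice the checks would come from IPDL-generated code rather than anything hand-written like this.

```cpp
#include <cassert>

// Hypothetical model of the strawman's INIT/UPDATE states.
enum class State { INIT, UPDATE };
enum class Msg { Publish, Update };

// Returns true and advances the state if msg is legal in the current state;
// returns false and leaves the state untouched otherwise.
bool Transition(State& state, Msg msg) {
  switch (state) {
    case State::INIT:
      // Only Publish is accepted before a tree exists on the other side.
      if (msg == Msg::Publish) { state = State::UPDATE; return true; }
      return false;
    case State::UPDATE:
      // After Publish, only Update is accepted; the state stays UPDATE.
      return msg == Msg::Update;
  }
  return false;
}
```

In other words, a tree must be published exactly once before any changesets are sent, and the sketch rejects re-publishing afterwards.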

What comprises a changeset is eminently flexible. For example, some operations may only apply to certain types of layers; IPDL's type system can capture this, if desired.

union ClippableLayer { PImageLayer; PColorLayer; /*...*/ };

struct SetClip { ClippableLayer x; gfxRect clipRect; };

Need lots of input from roc/Bas/jrmuizel here.

Recording modifications made to layer trees during transactions

It's not yet clear what the best way to implement this is. One approach would be to add an optional TransactionObserver member to LayerManagers. The observer would be notified on each layer tree operation. For remoting layers, we would add a TransactionObserver implementation that bundles up modifications into an nsTArray<Edit> per above. A second approach would be for LayerManagers to optionally record all tree modifications internally, then invoke an optional callback on transaction.End(). For remoting, this callback would transform the manager's internal changeset format into nsTArray<Edit>.
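The second approach might look roughly like this. It is a sketch with invented names (`RecordingLayerManager`, `SetEndCallback`), and `std::vector` stands in for nsTArray so the example compiles outside Gecko. Edits accumulate inside the manager and the callback sees them once, when the transaction ends.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical sketch of the second approach; not the real LayerManager API.
struct Edit { std::string op; int layer; };

class RecordingLayerManager {
 public:
  using EndCallback = std::function<void(const std::vector<Edit>&)>;

  void SetEndCallback(EndCallback cb) { mOnEnd = std::move(cb); }

  void BeginTransaction() {
    assert(!mInTransaction && "transactions do not nest in this sketch");
    mInTransaction = true;
    mEdits.clear();
  }

  // Tree operations record themselves while a transaction is open.
  void SetOpaque(int layer) { Record({"SetOpaque", layer}); }
  void RemoveLayer(int layer) { Record({"Remove", layer}); }

  // Hands the accumulated edits to the callback once per transaction.
  void EndTransaction() {
    assert(mInTransaction && "EndTransaction without BeginTransaction");
    mInTransaction = false;
    if (mOnEnd) mOnEnd(mEdits);
  }

 private:
  void Record(Edit e) {
    if (mInTransaction) mEdits.push_back(std::move(e));
  }

  bool mInTransaction = false;
  std::vector<Edit> mEdits;
  EndCallback mOnEnd;
};
```

Compared with a per-operation observer, this keeps the notification cost to one call per transaction, at the price of the manager holding its own changeset format.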

Strawman complete example

TODO: assume processes have been launched per diagram above. Walk through layer tree creation and Publish()ing. Walk through transaction and Update() propagation.

Platform-specific sharing issues

X11

When X11 clients die, the X server appears to free their resources automatically (apparently when the Display* socket closes?). This is generally good but a problem for us because we'd like child processes to conceptually "own" their surfaces, with parents just keeping an "extra ref" that can keep the surface alive after the child's death. We also want a backstop that ensures all resources across all processes are cleaned up when Gecko dies, either normally or on a crash. For Shmem image surfaces, this is easy because "sharing" a surface to another process is merely a matter of dup()ing the shmem descriptor (whatever that means per platform), and the OS manages these descriptors automatically.

Wild idea: if the X server indeed frees client resources when the client's Display* socket closes, then we could have the child send the parent a dup() of the child's Display socket. The parent would close this dup on ToplevelActor::ActorDestroy() (like what happens automatically with Shmems), and the OS would close the dup automatically on crashes.
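The descriptor half of this idea can be checked in isolation. The sketch below assumes a POSIX system and uses a pipe as a stand-in for the child's Display socket; the function name is made up. It shows only that a dup()ed descriptor keeps the underlying connection open after the original is closed. Whether the X server really ties resource lifetime to that connection is the open question above and is not tested here.

```cpp
#include <cassert>
#include <unistd.h>

// Assumption: a pipe stands in for the child's X11 Display socket.
// Returns true if the duplicate keeps the connection usable after the
// original descriptor is closed, and if closing the duplicate ends it.
bool DupKeepsConnectionAlive() {
  int fds[2];
  if (pipe(fds) != 0) return false;

  int keepAlive = dup(fds[1]);  // the "extra ref" the parent would hold
  if (keepAlive < 0) {
    close(fds[0]);
    close(fds[1]);
    return false;
  }

  close(fds[1]);  // the original owner goes away

  // The write end is still open through the duplicate.
  const char msg = 'x';
  bool ok = write(keepAlive, &msg, 1) == 1;
  char got = 0;
  ok = ok && read(fds[0], &got, 1) == 1 && got == msg;

  close(keepAlive);  // dropping the last reference closes the write end

  // With every write end closed, read() reports end-of-file (returns 0).
  ok = ok && read(fds[0], &got, 1) == 0;
  close(fds[0]);
  return ok;
}
```

In the real scheme the duplicate would have to be passed to the parent process, for example as SCM_RIGHTS ancillary data over a Unix domain socket, which this single-process sketch does not attempt.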