Abhishek/peragro GSoC2016Proposal


Personal Details

- Name : Abhishek Kumar Singh

- IRC : kanha@freenode.net

- Location (Time Zone) : India, IST (UTC+5:30)

- Education : MS in Computer Science and Engineering, IIIT Hyderabad

- Email : abhishekkumarsingh.cse@gmail.com, abhishek.singh@research.iiit.ac.in

- Blog URL : http://abhisheksingh01.wordpress.com/

Peragro AT

Abstract

This project implements an audio search system for Peragro: an Audio Information Retrieval (AIR) system that provides client API(s) for audio search and information retrieval. Search will be based on the annotated text, descriptions, tags and labels associated with an audio file, as well as on its low-level and high-level features. The focus is on supporting both text-based and content-based search. Users will submit queries through the client API(s) and receive a list of relevant audio files in return.

Benefit to Peragro community

“Search, and you will find”

- Provides client API(s) for text-based and content-based search. A query can be some text or a piece of audio.

- Supports advanced queries that combine content analysis features with other metadata (tags, etc.), including filters and group-by options.

- Supports duplicate detection, which helps in finding duplicate entries in the collection.

- Helps in finding relevant audio quickly and efficiently.

Project Details

INTRODUCTION

The idea of implementing a search plugin for Peragro-AT was proposed after a thorough discussion with the Peragro community. It involves implementing an Audio Information Retrieval System (AIRS) that provides audio content search.

Audio Information Retrieval(AIR) can be:

- Text based

- Content based

- Text based AIR

The query can be any text, say 'rock' or 'beethoven'. The system searches through the text (tags, artist name, description) associated with each audio file and returns a list of the audio files whose text matches. This is simple to implement, but it does not help much in audio retrieval because most of the time the audio does not carry enough annotations.
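The matching step described above can be sketched with a tiny in-memory inverted index. This is only an illustration of the idea: the catalogue, the function names and the scoring (number of matched query tokens) are assumptions made for the example, not part of Peragro or of any search library.

```python
from collections import defaultdict

# Hypothetical toy catalogue: audio id -> annotation text (tags, artist, description).
CATALOGUE = {
    1: "rock guitar solo live",
    2: "beethoven piano sonata classical",
    3: "classical guitar study",
}


def build_inverted_index(catalogue):
    """Map each lowercased token to the set of audio ids whose text contains it."""
    index = defaultdict(set)
    for audio_id, text in catalogue.items():
        for token in text.lower().split():
            index[token].add(audio_id)
    return index


def text_search(index, query):
    """Return audio ids ranked by how many query tokens they match."""
    scores = defaultdict(int)
    for token in query.lower().split():
        for audio_id in index.get(token, ()):
            scores[audio_id] += 1
    # Highest score first; ties are broken by id to keep the order stable.
    return sorted(scores, key=lambda i: (-scores[i], i))


index = build_inverted_index(CATALOGUE)
print(text_search(index, "classical guitar"))  # [3, 1, 2]
```

An audio file with no annotations never appears in such an index, which is exactly the limitation that motivates content-based search.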

- Content based AIR

Content-based retrieval bridges the semantic gap when text annotations are nonexistent or incomplete. For example, a customer walks into a record store knowing only a tune from the song or album they want to buy, but not the title, album, composer or artist. The customer cannot solve this problem alone, but a salesperson with a vast knowledge of music can identify the tune when the customer hums it. Content-based audio information retrieval can take the place of that salesperson.

Benefit :

- Given a piece of audio as a query, a content-based AIRS searches the indexed database of audio and returns a list of audio files similar to the query.

- With a little tweaking, it can also detect duplicates, and hence possible copyright issues.

REQUIREMENTS DURING DEVELOPMENT

- Hardware Requirements

- A modern PC/Laptop

- Graphics Processing Unit (GPU)

- Software Requirements

- Elasticsearch (storage database)

- elasticsearch-py library

- Kibana (data visualization)

- Python 2.7.x/C

- scikit-learn, Keras

- Coverage

- Pep8

- Pyflakes

- Vim (editor)

- Sphinx (documentation)

- Python Unit-testing framework (Unittest/Nosetest)

THE OUTLINE OF WORK PLAN

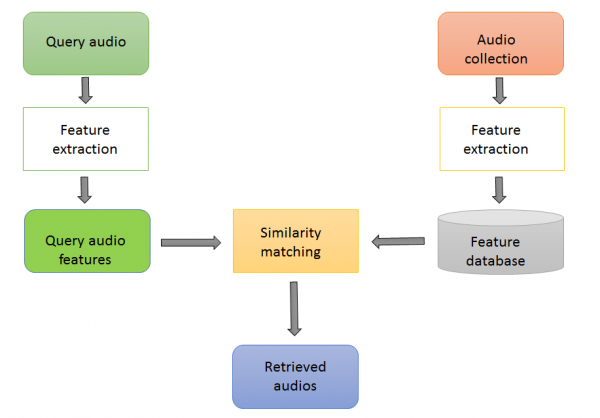

Audio information retrieval framework consists of some basic processing steps:

- Feature extraction

- Segmentation

- Storing feature vectors(indexing)

- Similarity matching

- Returning similar results

Some proposed models for Content based audio retrieval

- Vector Space Model(VSM)

- Spectrum analysis(Fingerprint) model

- Deep Neural network model (Deep learning approach)

Division of Work

The whole work is divided into four phases:

- Phase I : Feature extraction and index creation

- Phase II : Vector Space Model

- Phase III : Spectrum analysis(Fingerprint) model

- Phase IV : Deep Neural Network Model (Deep learning approach)

Phase I : Feature extraction and index creation

In this phase, features are extracted from the audio collection and an index is created for them. These features could be annotated text, tags, descriptions, labels, etc., as well as low-level and high-level metadata.

A dataset needs to be created by downloading openly available audio collections from a hosting source. Freesound seems to be a good platform for downloading and creating datasets. It also provides annotated data along with low-level and high-level descriptors for its sounds. These can be used to build and test our search system.

Demo code for previewing audio from Freesound:

"""
Demo code to show the structure of the freesound
client API(s).
"""
import freesound

c = freesound.FreesoundClient()
c.set_token("<your_api_key>", "token")

results = c.text_search(query="dubstep", fields="id,name,previews")

# download the preview of each hit into the current directory
for sound in results:
    sound.retrieve_preview(".", sound.name + ".mp3")
    print(sound.name)

Features will be extracted from the audio using the existing Peragro AT library and free and open source libraries such as librosa and pyAudioAnalysis. These features will be indexed in the Elasticsearch database.
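To give a feel for what a low-level feature is, the sketch below computes two simple ones, RMS energy and zero-crossing rate, in plain Python. It does not use the Peragro AT, librosa or pyAudioAnalysis APIs; the function names and the synthetic sine tone standing in for decoded audio are assumptions made for illustration only.

```python
import math


def rms_energy(samples):
    """Root-mean-square amplitude of a frame of samples."""
    return math.sqrt(sum(s * s for s in samples) / float(len(samples)))


def zero_crossing_rate(samples):
    """Fraction of adjacent sample pairs whose signs differ."""
    crossings = sum(
        1 for a, b in zip(samples, samples[1:]) if (a >= 0) != (b >= 0)
    )
    return crossings / float(len(samples) - 1)


def extract_features(samples):
    """Collect the features into a dict that could be stored as one document."""
    return {"rms": rms_energy(samples), "zcr": zero_crossing_rate(samples)}


# A synthetic 440 Hz sine sampled at 8 kHz stands in for decoded audio.
RATE = 8000
tone = [math.sin(2 * math.pi * 440 * n / RATE) for n in range(RATE)]
features = extract_features(tone)
print(features)
```

A dictionary like `features` has the same shape as the document bodies indexed in the Elasticsearch demo, so extracted descriptors can be stored next to the textual metadata.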

Sample code to store indexes in Elasticsearch:

"""
Demo code to show indexing in Elasticsearch:
creating an index for a small song collection.
It indexes details about a song: singer, title,
tags, release, genre, length, awesomeness
"""
import elasticsearch

es = elasticsearch.Elasticsearch()  # use default of localhost, port 9200

es.index(index='song_details', doc_type='song', id=1, body={
    'singer': 'Ellie Goulding',
    'title': 'love me like you do',
    'tags': ['cherrytree', 'interscope', 'republic'],
    'release': '7 January 2015',
    'genre': 'electropop',
    'length': 4.12,
    'awesomeness': 0.8
})

es.index(index='song_details', doc_type='song', id=2, body={
    'singer': 'Eminem',
    'title': 'love the way you lie',
    'tags': ['Aftermath', 'Interscope', 'shady'],
    'release': '9 August 2010',
    'genre': 'hip hop',
    'length': 4.22,
    'awesomeness': 0.6
})

es.index(index='song_details', doc_type='song', id=3, body={
    'singer': 'Arijit Singh',
    'title': 'Tum hi ho',
    'tags': ['romantic', 'movie song track'],
    'release': '2 April 2013',
    'genre': 'romantic',
    'length': 4.15,
    'awesomeness': 0.95
})

# searching for songs matching the text 'tum hi'
results = es.search(index='song_details', q='tum hi')
print(results['hits']['hits'])

Once the indexes have been created and stored in the Elasticsearch database, text-based search is straightforward because Elasticsearch supports Lucene-based search.
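Beyond the simple `q=` search in the demo, a text query can be combined with a filter and a group-by. The sketch below only builds the request body as a Python dictionary, using the bool query and terms aggregation of the Elasticsearch query DSL. The helper name `build_song_query` is hypothetical, the field names come from the indexing demo, and whether `genre` can be aggregated directly depends on the index mapping, which is an assumption here.

```python
import json


def build_song_query(text, genre=None, group_by=None):
    """Build a request body: full-text match, optional filter and grouping."""
    bool_query = {"must": [{"match": {"title": text}}]}
    if genre is not None:
        # Filters restrict the result set without affecting the relevance score.
        bool_query["filter"] = [{"term": {"genre": genre}}]
    body = {"query": {"bool": bool_query}}
    if group_by is not None:
        # A terms aggregation buckets the hits by field value (a "group by").
        # Assumption: the field is mapped so that it can be aggregated on.
        body["aggs"] = {"by_" + group_by: {"terms": {"field": group_by}}}
    return body


body = build_song_query("tum hi", genre="romantic", group_by="genre")
print(json.dumps(body, indent=2, sort_keys=True))

# With a running server, the body could be sent as:
#   es.search(index='song_details', body=body)
```

Keeping the query construction separate from the client call makes it easy to unit test without a running Elasticsearch instance.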

Some more functionality that Elasticsearch provides:

- Distributed, scalable, and highly available

- Real-time search and analytics capabilities

- Sophisticated RESTful API

Hence, Elasticsearch is a good choice for storing the index. The data stored in Elasticsearch will also be used to create visualizations with Kibana. Visualizing the data makes its behavior much easier to understand.

Reason for using Kibana

Kibana provides the following functionality:

- Flexible analytics and visualization platform

- Real-time summary and charting of streaming data

- Intuitive interface for a variety of users

- Instant sharing and embedding of dashboards

Phase II : Vector Space Model(VSM)

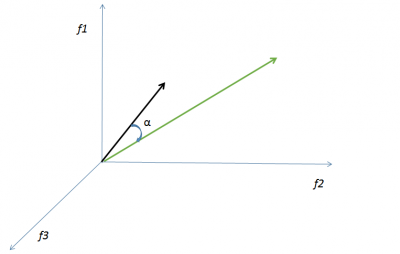

This is a basic yet effective model. The idea is to represent every audio entity as a vector in the feature space.

Given a query audio, compute its vector representation and then measure the similarity between the query vector and each indexed audio vector. The higher the similarity, the more relevant the result. Cosine similarity can be used to calculate the relevance score. Features could include tempo (BPM), average volume, genre, mood, fingerprint, etc.
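A minimal sketch of the ranking step, assuming each audio file has already been reduced to a numeric feature vector. The three-dimensional vectors, the song ids and the function names are made up for the example; a real index would hold many more dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def rank_by_similarity(query_vec, indexed):
    """Return (score, audio_id) pairs, most similar first."""
    scored = [
        (cosine_similarity(query_vec, vec), audio_id)
        for audio_id, vec in indexed.items()
    ]
    return sorted(scored, key=lambda pair: (-pair[0], pair[1]))


# Hypothetical, already normalised feature vectors: (tempo, average volume, brightness).
INDEXED = {
    "song_a": [0.9, 0.2, 0.1],
    "song_b": [0.1, 0.8, 0.7],
    "song_c": [0.5, 0.5, 0.5],
}
query = [0.85, 0.25, 0.15]

ranking = rank_by_similarity(query, INDEXED)
for score, audio_id in ranking:
    print(audio_id, round(score, 3))
```

Because cosine similarity ignores vector length, features measured on very different scales should be normalised first, otherwise the largest-valued feature dominates the score.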

Phase III : Spectrum analysis(Fingerprint) model

This model relies on fingerprinting music based on its spectrogram.

Basic steps of processing

- Preprocessing step: fingerprint a comprehensive collection of music and store the fingerprint data in the database (indexing)

- Extract the fingerprint of the music piece (m) used as the query

- Match the fingerprint of m against the indexed fingerprints

- Return the matched music in order of relevance

Advantage

It works on very obscure songs, and does so even with extraneous background noise.

Implementation Details

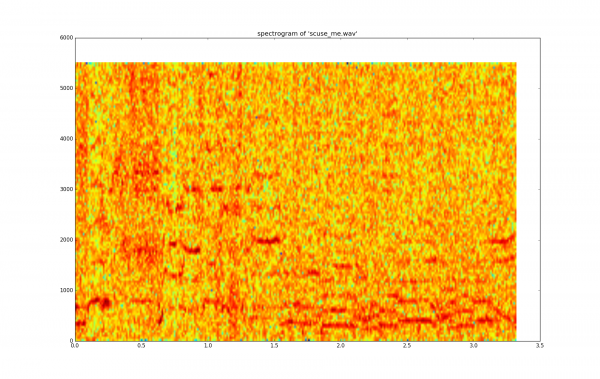

The idea here is to think of a piece of music as a time-frequency graph, also called a spectrogram. This graph has three axes: time on the x-axis, frequency on the y-axis and intensity on the z-axis. A horizontal line represents a continuous pure tone, while a vertical line represents a sudden burst of white noise.

sample wave file: scuse me

"""Spectrogram image generator for a given audio wav sample.

A spectrogram is a visual representation of the spectral frequencies in a sound.
The horizontal (x) axis is time, the vertical (y) axis is frequency
and color represents intensity.
"""
import wave

import numpy
import pylab


def graph_spectrogram(wav_file):
    sound_info, frame_rate = get_wav_info(wav_file)
    pylab.figure(num=None, figsize=(19, 12))
    pylab.subplot(111)
    pylab.title('spectrogram of %r' % wav_file)
    pylab.specgram(sound_info, Fs=frame_rate)
    pylab.savefig('spectrogram.png')


def get_wav_info(wav_file):
    # assumes 16-bit PCM samples; stereo files are read as interleaved samples
    wav = wave.open(wav_file, 'rb')
    frames = wav.readframes(-1)
    # frombuffer replaces the deprecated fromstring(frames, 'Int16')
    sound_info = numpy.frombuffer(frames, dtype=numpy.int16)
    frame_rate = wav.getframerate()
    wav.close()
    return sound_info, frame_rate


if __name__ == '__main__':
    wav_file = 'scuse_me.wav'
    graph_spectrogram(wav_file)

The points of interest are the points with peak intensity. For each of these peak points, the algorithm keeps track of the frequency and the amount of time elapsed since the beginning of the track. The fingerprint of the song is generated from this information.

Details of algorithm

Read the audio/music data as a normal input stream. This is time-domain data, but we need spectrum analysis rather than raw time-domain data. Using the Discrete Fourier Transform (DFT), we convert the time-domain data to the frequency domain. The problem is that in frequency-domain data we lose track of time. To overcome this, we divide the data into small chunks (windows) and transform each chunk separately. Because we know the position of each window, we know the time for each transformed chunk.

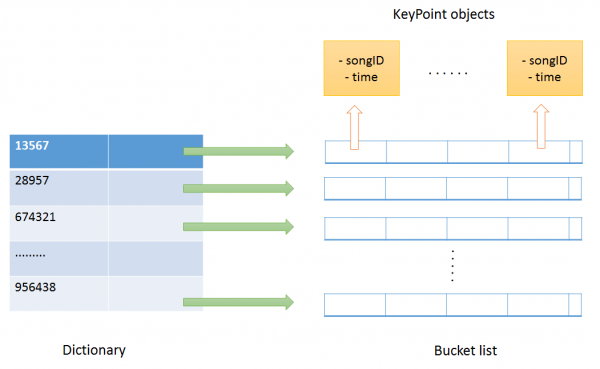
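The windowing idea can be sketched as follows. To keep the example self-contained it uses a naive O(n²) DFT written with the standard library; a real implementation would use an FFT routine instead. The function names and the synthetic two-tone signal are assumptions made for illustration.

```python
import cmath
import math


def dft_magnitudes(window):
    """Magnitude of each DFT bin from 0 up to the Nyquist bin (naive DFT)."""
    n = len(window)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(
            window[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)
        )
        mags.append(abs(acc))
    return mags


def windowed_spectrum(samples, window_size):
    """Split samples into consecutive windows; return (start_index, magnitudes) pairs."""
    frames = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        window = samples[start:start + window_size]
        frames.append((start, dft_magnitudes(window)))
    return frames


# Synthetic signal: a tone at bin 4 in the first window, at bin 10 in the second.
N = 64
signal = [math.sin(2 * math.pi * 4 * t / N) for t in range(N)]
signal += [math.sin(2 * math.pi * 10 * t / N) for t in range(N)]

frames = windowed_spectrum(signal, N)
for start, mags in frames:
    peak_bin = max(range(len(mags)), key=lambda k: mags[k])
    print("window starting at sample", start, "peaks at bin", peak_bin)
```

A single transform over the whole signal would show both tones but not when each one occurs; the per-window transform keeps that timing through the start index of each window.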

Applying the Fast Fourier Transform (FFT) to every chunk gives us the frequency data for each window. Our goal now is to find the interest points, also called key music points, store them in a hash table and match them against the indexed database. This is efficient because the average lookup time for a hash table is O(1), i.e. constant. Each line of the spectrum represents the data for a particular window. We take certain frequency ranges, say 40-80, 80-120, 120-180 and 180-300, and from each range pick the point with the highest magnitude in each window. These are the interest points, or key points.
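Selecting the key points of one window could look like the sketch below. It treats the ranges from the text as bin indices with an exclusive upper bound, which is an assumption, and the magnitude spectrum is invented for the example.

```python
# Ranges from the text, interpreted here as [low, high) bin indices.
BANDS = [(40, 80), (80, 120), (120, 180), (180, 300)]


def key_points(magnitudes, bands=BANDS):
    """Return, for each band, the bin index with the highest magnitude."""
    points = []
    for low, high in bands:
        high = min(high, len(magnitudes))
        if low >= high:
            continue  # the spectrum is too short to cover this band
        best = max(range(low, high), key=lambda k: magnitudes[k])
        points.append(best)
    return tuple(points)


# Hypothetical magnitude spectrum of one window: flat, with one strong bin per band.
spectrum = [1.0] * 300
for strong_bin, value in [(45, 9.0), (100, 7.5), (150, 8.0), (250, 6.0)]:
    spectrum[strong_bin] = value

print(key_points(spectrum))  # (45, 100, 150, 250)
```

Taking one peak per band, rather than the strongest peaks overall, keeps the fingerprint spread across the spectrum so that a loud bass line does not crowd out everything else.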

The next step is to use these key points for hashing and to generate the index database. The key points obtained from each window are used to generate a hash key. In the dictionary, a hash key maps to a bucket list stored as its value. The bucket list is indexed by songID, and each entry holds the details stored in a KeyPoint object.

class KeyPoint:
    def __init__(self, time, songID):
        self.time = time
        self.songID = songID
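Building the index from per-window key points might be sketched like this. As a simplification, each hash key maps to a flat list of KeyPoint objects rather than a bucket list indexed by songID, the tuple of key points itself serves as the dictionary key, and the song data is invented. The class is repeated only so that the snippet runs on its own.

```python
from collections import defaultdict


class KeyPoint(object):
    """Where (window number) and in which song a hash key occurred."""

    def __init__(self, time, songID):
        self.time = time
        self.songID = songID


def hash_key(points):
    """Turn one window's key points into a hashable dictionary key."""
    return tuple(points)


def build_index(songs):
    """songs: {songID: [key points of window 0, key points of window 1, ...]}."""
    index = defaultdict(list)
    for song_id, windows in songs.items():
        for time, points in enumerate(windows):
            index[hash_key(points)].append(KeyPoint(time, song_id))
    return index


# Hypothetical key points for two short songs.
SONGS = {
    1: [(45, 100, 150, 250), (50, 90, 130, 200), (41, 85, 170, 190)],
    2: [(60, 110, 125, 280), (50, 90, 130, 200)],
}

index = build_index(SONGS)
matches = index[(50, 90, 130, 200)]
print(sorted((kp.songID, kp.time) for kp in matches))  # [(1, 1), (2, 1)]
```

Real recordings are noisy, so an implementation would likely round or quantise the key points before hashing so that small frequency shifts still produce the same key.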

Using the technique described above, we can create an index for the audio/music collection. Given a query audio, we compute its fingerprint (key points and time details) and match it against the indexed database to find similar audio. It is important to match not just the key points but the timing too: the matches must line up in time. For each hash match, we take the difference between the time of the match in the indexed song (from the KeyPoint object) and the current time in our recording (from the query fingerprint). This difference is stored together with the song ID, because the offset tells us where in the song the recording could be. Once we have gone through all the hashes from our recording, we are left with many song IDs and offsets. The more hashes a song has with matching offsets, the more relevant it is.
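The offset voting can be sketched as below. The index layout (hash key mapped to a list of `(song_id, time_in_song)` pairs), the string hash keys and the function name are simplifications assumed for the example.

```python
from collections import Counter


def best_matches(index, query_keys):
    """Rank songs by how many hash matches agree on a single time offset.

    index: {hash_key: [(song_id, time_in_song), ...]}
    query_keys: hash keys of the recording, in window order.
    Returns (votes, song_id, offset) tuples, best match first.
    """
    votes = Counter()
    for query_time, key in enumerate(query_keys):
        for song_id, song_time in index.get(key, ()):
            offset = song_time - query_time
            votes[(song_id, offset)] += 1

    # Keep only the best-supported offset for each song.
    best = {}
    for (song_id, offset), count in votes.items():
        if count > best.get(song_id, (0, None))[0]:
            best[song_id] = (count, offset)

    return sorted(
        ((count, song_id, offset) for song_id, (count, offset) in best.items()),
        key=lambda item: (-item[0], item[1]),
    )


# Hypothetical index: song 1 contains h1..h4 in order, song 2 shares h1 and h3.
INDEX = {
    "h1": [(1, 0), (2, 5)],
    "h2": [(1, 1)],
    "h3": [(1, 2), (2, 0)],
    "h4": [(1, 3)],
}

# A recording that starts one window into song 1.
result = best_matches(INDEX, ["h2", "h3", "h4"])
print(result)  # [(3, 1, 1), (1, 2, -1)]
```

Song 2 shares a hash with the recording, but only song 1 collects several votes for the same offset, which is what separates a true match from coincidental hash collisions.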

Reference Paper: http://www.ee.columbia.edu/~dpwe/papers/Wang03-shazam.pdf

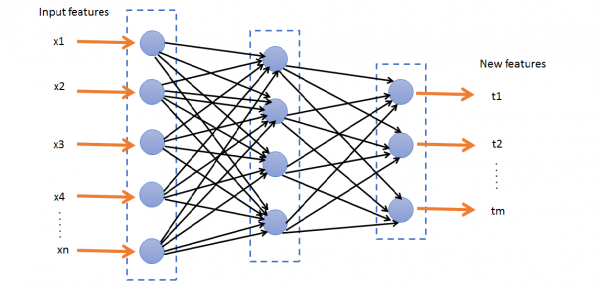

Phase IV : Deep Neural Network Model (Deep learning Approach)

This method learns the features of an audio automatically. It is an unsupervised approach to learning important features. In a deep neural network, each layer extracts a more abstract representation of the audio. The initial input features are passed through the input layer, and computations are done at the hidden layers. After passing through some hidden layers, we obtain a new feature representation of the initial input. These learned feature vectors become the representations of the audio. We can then apply machine learning algorithms to these features for various classification and clustering tasks.

In this feature space, audio files that lie close to each other share some notion of similarity.
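The sketch below shows only the forward pass described above: input features flow through two small fully connected layers and come out as a shorter vector. The weights are hand-picked placeholders, since training is out of scope for the example, and none of the names correspond to a Keras API.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer with tanh activation."""
    return [
        math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def encode(features, layers):
    """Pass the features through each (weights, biases) layer in turn."""
    activation = features
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation


# Hypothetical weights; a real model would learn them from the audio collection.
LAYERS = [
    # 4 inputs -> 3 hidden units
    ([[0.5, -0.2, 0.1, 0.0],
      [0.3, 0.8, -0.5, 0.2],
      [-0.1, 0.4, 0.6, -0.3]], [0.0, 0.1, -0.1]),
    # 3 hidden units -> 2-dimensional representation
    ([[1.0, -1.0, 0.5],
      [0.2, 0.3, -0.7]], [0.0, 0.0]),
]

embedding = encode([0.9, 0.1, 0.4, 0.7], LAYERS)
print(len(embedding), embedding)
```

Once the weights are learned, vectors like `embedding` can be compared with the same similarity measure used in the Vector Space Model.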

Advantage

- Saves the effort of hand-crafting audio features (metadata extraction and manual labelling).

- It can be used to find similar audio/music, which is useful in content-based search. Combined with user information, it can also be used to recommend songs/audio.

- It can be used to detect duplicate songs. It often happens that two songs end up with different IDs but are in fact the same song.

Deliverables

An audio retrieval system that supports the following features:

- Client API(s) for searching.

- Support for text-based search. Users can find relevant audio by providing a tag/label or some keywords.

- Support for content-based search. It returns audio similar to the content provided by the user, which can be a piece of audio.

- Fingerprinting and similarity features that help in duplicate detection.

- Support for advanced queries. Users will be able to filter and group results.

- Visualization support for the data, powered by Kibana.

- Proper documentation of the work for users and developers.

Sample code showing an estimate of the API(s) that will be provided at the end:

"""
A sample to show some of the API(s) provided by the
peragro search system and their usage.
"""
from peragro import search

# create a search client
c = search.searchClient()

# set the api key for the client
# this may not be part of the final API; it depends on
# the peragro community
c.set_token("<your_api_key>", "token")

# get a sound based on its id
sound = c.get_sound(108)

# retrieve relevant results for a query
results = c.text_search(query="hip hop")

# the fields parameter allows you to specify the information
# you want in the results list
results = c.text_search(query="hip hop", fields="id,name,previews")

# apply a filter
sounds = c.text_search(query="hip hop", filter="tag:loop", fields="id,name,url")

# search sounds using a content-based descriptor target and/or filter
sounds = c.content_based_search(
    target="lowlevel.pitch.mean:220",
    descriptors_filter="lowlevel.pitch_instantaneous_confidence.mean:[0.8 TO 1]",
    fields="id,name,url")

# content-based search using a given piece of audio
sounds = c.content_based_search(audiofile="sample.mp3")

# combine text and content-based queries
sounds = c.combined_search(target="lowlevel.pitch.mean:220", filter="single-note")

# placeholder paths for the two files being compared
audiofile1, audiofile2 = "first.mp3", "second.mp3"

# returns a confidence score for the possibility of two
# audio files being exactly the same
dup_score = c.get_duplicate_score(audiofile1, audiofile2)

# returns True if duplicate else False
check = c.is_duplicate(audiofile1, audiofile2)

Project Schedule

1. Requirements gathering (1 week)

- 1. Writing down requirements of the user.

- 2. Setting up test standards.

- 3. Writing test specification to test deployed product.

- 4. Start writing developer documentation.

- 5. Learning more about Elasticsearch and Kibana.

2. Feature extraction and indexing

- 1. Getting a dataset to work on.

- 2. Writing code to extract features from the dataset.

- 3. Writing automated unit tests to test the written code.

- 4. Adding to developer documentation.

3. Data visualization

- 1. Writing code for data visualization.

- 2. Writing automated unit tests to test the written code.

- 3. Adding to developer documentation.

4. Implementing Vector Space Model

- 1. Writing code to implement VSM.

- 2. Writing automated unit tests to test the written code.

- 3. Adding to developer documentation.

5. Implementing Spectrum Analysis Model

- 1. Writing code for fingerprinting audio.

- 2. Writing code for storing fingerprints in the Elasticsearch database.

- 3. Writing automated unit tests to test the written code.

- 4. Adding to developer documentation.

6. Implementing Deep Neural Network Model

- 1. Writing code for the DNN. Trying out visualization to get some sense of the data.

- 2. Using state-of-the-art ML algorithms on the new features.

- 3. Validation of POC.

- 4. Adding details to documentation.

7. Buffer time

- 1. Prepare user documentation.

- 2. Implement nice-to-have features.

- 3. Improve code readability and documentation.

Tasks to be done before midterm [23rd May, 2016 - 23rd June, 2016]

1. Requirements gathering

2. Feature extraction and indexing

3. Data visualization

4. Starting work on VSM

Tasks to be done after midterm [25th June, 2016 - 20th August, 2016]

4. Implementing Vector Space Model

5. Implementing Spectrum Analysis Model

6. Implementing Deep Neural Network Model

7. Buffer Time

Time

I will be spending 45 hours per week on this project.

Summer Plans

I am a first-year master's student, and my final exams will be over by the end of April 2016. We then have summer vacation until the end of July. Since I am free until then, I will spend most of my time on the project.

Motivation

I am the kind of person who always aspires to learn more and explore different areas. I am also an open source enthusiast who likes exploring new technologies. GSoC (Google Summer of Code) provides a very good platform for students like me to learn and showcase their talents by building a cool application by the end of the summer.

Bio

I am a first-year MS student in Computer Science and Engineering at IIIT Hyderabad, India. I am passionate about machine learning, deep learning, information retrieval and text processing, and have a keen interest in open source software. I am also a research assistant at the Search and Information Extraction Lab (SIEL) at my university.

Experiences

My work includes information retrieval for text-based systems. During my course work I built a search engine over the whole Wikipedia corpus from scratch. I have also been using machine learning and have dived into deep learning concepts for representation learning. Currently, I am working on learning efficient representations of nodes in social network graphs.

I have sound knowledge of programming languages such as Python, Cython, C and C++. I have a good understanding of Python's advanced concepts like descriptors, decorators, metaclasses, generators and iterators, along with other OOP concepts. I have also contributed to open source organizations such as Mozilla, Fedora, Tor and Ubuntu. As part of a GSoC 2014 project, I worked on the Mars exploration project of the Italian Mars Society. It was a virtual reality project in which I implemented a system for full-body and hand gesture tracking of astronauts. This allows astronauts in the real world to control their avatars in the virtual world through body gestures. Details of this project can be found at:

concept idea : https://wiki.mozilla.org/Abhishek/IMS_Gsoc2014Proposal

IMS repo : https://bitbucket.org/italianmarssociety/

Details of some of my contributions can be found here:

Github : https://github.com/AbhishekKumarSingh

Bitbucket : https://bitbucket.org/abhisheksingh

My CV : https://github.com/AbhishekKumarSingh/CV/blob/master/abhishek.pdf